The future of AI isn't just intelligent; it's meticulously regulated.

The European Union's Artificial Intelligence Act is poised to fundamentally reshape how AI systems are developed, deployed, and governed, especially those deemed "high-risk."

While much discussion centers on the legal and ethical implications, the rubber truly meets the road when engineering teams have to translate regulatory prose into tangible, technical requirements. Compliance isn't a one-time audit; it's an ongoing operational necessity that demands real-time visibility and technical infrastructure. To establish the full operational and technical framework, read our Definitive Guide to AI Governance.

Things you’ll learn:

- Why compliance is continuous in a shifting regulatory landscape.

- Specific technical requirements for high-risk AI systems.

- How data lineage proves EU AI Act compliance.

- Building real-time technical visibility for adaptability.

Compliance isn't a checkbox

Think of regulatory compliance less like a static blueprint and more like a living organism that keeps changing. The EU AI Act, while significant, is the beginning, not the end. Expect amendments, evolving standards, and potentially different approaches in other jurisdictions (like the US or UK). The result is a continuously shifting landscape.

In such an environment, static compliance reports or point-in-time assessments quickly become outdated. What was compliant yesterday might not be tomorrow. To truly adapt, organizations need end-to-end visibility across their AI systems – from the data inputs and model training pipelines to the deployment environment and operational performance.

This holistic, real-time view isn't just about knowing where your data is or what your model is doing; it's about understanding the context and flow of how your AI system operates in practice. This continuous visibility is the only way to identify deviations, flag potential non-compliance issues as they emerge, and demonstrate adaptability as regulations evolve.

From legal text to technical to-do list

The EU AI Act places stringent obligations on providers of high-risk AI systems. These requirements, while phrased in regulatory language, have direct technical implications for engineering teams responsible for building and maintaining these systems. Let's break down some key areas:

- Risk management system: Requires identifying, analyzing, and mitigating risks throughout the AI system's lifecycle.

- Technical requirement: Implement continuous monitoring of performance metrics, drift detection (data, concept, and model drift), outlier detection, and potential failure points. Systems must log exceptions and anomalies for analysis.

- Data governance and management: Focuses on using high-quality, relevant, and representative datasets, free from errors and bias, for training, validation, and testing.

- Technical requirement: Implement automated data quality checks, bias detection frameworks, data validation pipelines, and detailed logging of data provenance and transformation steps used in training and operation.

- Technical documentation: Providers must draw up detailed documentation about the system, its capabilities, limitations, and components.

- Technical requirement: Develop automated processes for generating and maintaining technical documentation derived directly from the codebase, model parameters, training data metadata, and operational logs. This needs to be living documentation, updated as the system evolves.

- Transparency and provision of information: Requires that users understand how the AI system works, its intended purpose, and its limitations.

- Technical requirement: Design systems with inherent logging of inputs, outputs, and decision-making paths (where explainability is feasible and required). Develop technical interfaces or mechanisms to provide users with clear information about the AI's operation and outputs in context.

- Human oversight: High-risk systems must be designed to allow for effective human oversight during operation.

- Technical requirement: Build technical interfaces or dashboards that provide humans with the necessary information (system performance, potential risks, input data context) to understand the situation and effectively monitor or intervene. Logs must capture human actions and override decisions.

- Accuracy, robustness, and cybersecurity: High-risk systems must perform consistently, be resilient to errors or attacks, and be secure.

- Technical requirement: Implement rigorous and continuous testing frameworks (including adversarial testing), performance monitoring dashboards with real-time alerts for degradation, and security monitoring specific to the AI model and its infrastructure.
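
To make the drift detection item under risk management more concrete, here is a minimal sketch of one common approach, the Population Stability Index (PSI), which compares the distribution of a feature in production against the distribution seen at training time. The function names, the ten-bin layout, and the 0.2 alert threshold are illustrative assumptions (0.2 is a widely used rule of thumb, not something the EU AI Act prescribes).

```python
import math
from typing import Dict, List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample (e.g. training data) and a current sample."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if every reference value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref = bucket_shares(reference)
    cur = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def check_drift(
    feature: str,
    reference: Sequence[float],
    current: Sequence[float],
    threshold: float = 0.2,  # assumed rule-of-thumb threshold, tune per system
) -> Dict[str, object]:
    """Return a loggable record stating whether the feature has drifted."""
    psi = population_stability_index(reference, current)
    return {"feature": feature, "psi": psi, "drifted": psi > threshold}
```

In practice a check like this would run on a schedule for each monitored feature, with the returned record written to the same log store used for exceptions and anomalies.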

These aren't abstract goals; they translate into specific coding practices, logging requirements, monitoring infrastructure, and data pipeline designs that engineering teams must implement and maintain continuously.
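
As one example of what a logging requirement can look like in code, the sketch below builds a structured audit record for each prediction, including any human override. The field names and the `make_audit_record` and `verify_chain` helpers are hypothetical. Chaining each record to the previous one by hash is our own design assumption to make tampering detectable; it is not something the Act mandates.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


def _digest(body: Dict[str, Any]) -> str:
    """Stable SHA-256 digest of a record body."""
    payload = json.dumps(body, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_audit_record(
    model_version: str,
    inputs: Dict[str, Any],
    output: Any,
    previous_hash: str = "",
    overridden_by: Optional[str] = None,
    override_output: Any = None,
) -> Dict[str, Any]:
    """Capture inputs, model output, and any human override in one record."""
    record: Dict[str, Any] = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "human_override": (
            {"reviewer": overridden_by, "final_output": override_output}
            if overridden_by
            else None
        ),
        "previous_hash": previous_hash,
    }
    # The hash covers every field above, so later edits become detectable.
    record["record_hash"] = _digest(record)
    return record


def verify_chain(records: List[Dict[str, Any]]) -> bool:
    """Check that no record was altered and that the chain order is intact."""
    previous_hash = ""
    for record in records:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["previous_hash"] != previous_hash:
            return False
        if record["record_hash"] != _digest(body):
            return False
        previous_hash = record["record_hash"]
    return True
```

A record produced this way can be appended as one JSON line per decision, which keeps the log easy to query when an auditor asks what the system decided and who changed the outcome.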

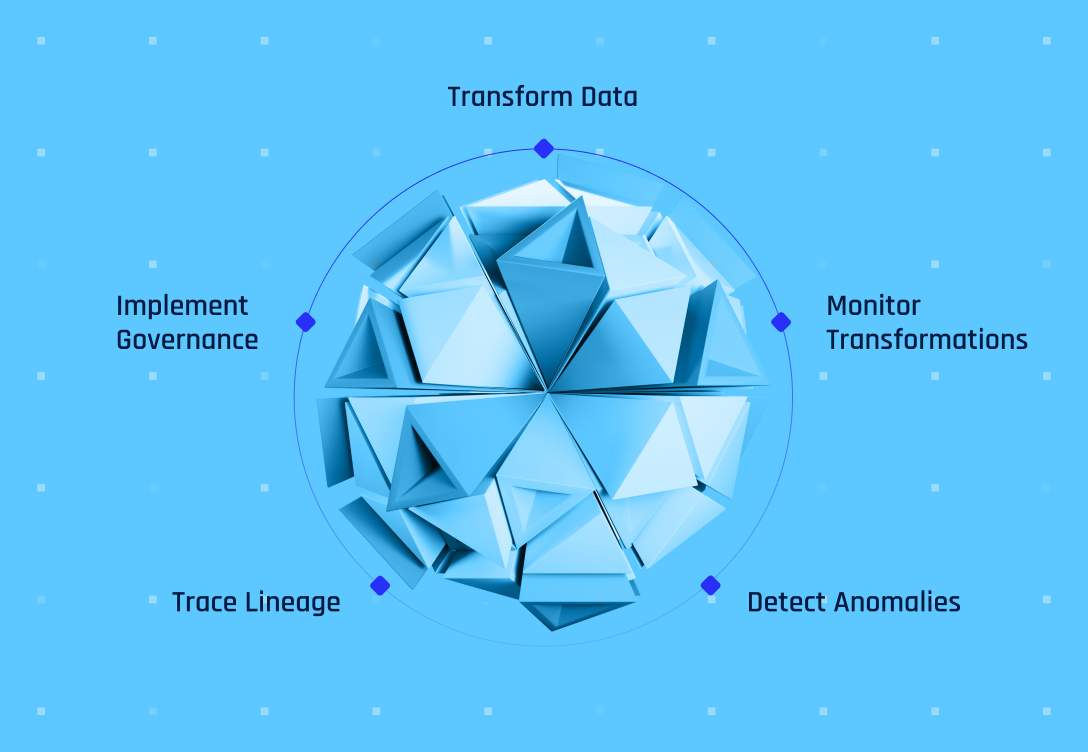

Proving compliance through data lineage

One technical requirement that underpins many of the points above, particularly data governance and transparency, is robust data lineage. Data lineage provides a clear, auditable history of data – showing where it came from, how it was transformed, what systems it passed through, and crucially, what data was used to train, test, validate, and operate a specific AI model.
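
At its core, lineage is a directed graph: each dataset or model points back to the artifacts it was derived from. The sketch below is a deliberately small, in-memory illustration of that idea. The `LineageGraph` class and the artifact names in the usage example are hypothetical, and a production system would persist this graph and populate it automatically from pipelines.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple


class LineageGraph:
    """Tracks which artifacts (datasets, models) were derived from which."""

    def __init__(self) -> None:
        # Maps each artifact to the artifacts it was directly derived from.
        self._parents: Dict[str, Set[str]] = {}
        # Maps a (source, output) edge to the transformation that produced it.
        self._steps: Dict[Tuple[str, str], str] = {}

    def record(self, output: str, inputs: List[str], step: str) -> None:
        """Register that `output` was produced from `inputs` by `step`."""
        self._parents.setdefault(output, set()).update(inputs)
        for source in inputs:
            self._parents.setdefault(source, set())
            self._steps[(source, output)] = step

    def step(self, source: str, output: str) -> Optional[str]:
        """Name of the transformation linking two artifacts, if recorded."""
        return self._steps.get((source, output))

    def upstream(self, artifact: str) -> Set[str]:
        """Every artifact that directly or indirectly fed into `artifact`."""
        seen: Set[str] = set()
        queue = deque([artifact])
        while queue:
            node = queue.popleft()
            for parent in self._parents.get(node, ()):
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return seen

    def raw_sources(self, artifact: str) -> Set[str]:
        """Upstream artifacts that have no recorded parents of their own."""
        return {a for a in self.upstream(artifact) if not self._parents[a]}


# Usage with made-up artifact names:
graph = LineageGraph()
graph.record("customers_clean", ["crm_export"], step="deduplicate")
graph.record("training_set", ["customers_clean", "transactions"], step="join")
graph.record("credit-model-1.4", ["training_set"], step="train")
print(sorted(graph.raw_sources("credit-model-1.4")))
```

With the graph in place, answering "which source systems fed this model?" becomes a single traversal rather than a manual investigation.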

For EU AI Act compliance, data lineage isn't just good practice; it's technical proof. It allows organizations to:

- Demonstrate data governance: Show auditors that the data used met quality, relevance, and representativeness requirements by tracing its origin and transformation.

- Ensure transparency: Provide a technical basis for explaining which data influenced a model's training or a specific operational outcome.

- Support human oversight: Give humans context by tracing the data inputs that led to an AI's output or recommendation.

- Facilitate auditing: Provide a clear, navigable trail for compliance officers and external auditors to verify adherence to data-related requirements.
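
For the data governance point above, the evidence an auditor sees is often a quality report attached to each dataset version in the lineage trail. The following is a rough sketch of such a report, assuming rows are plain dictionaries. The `dataset_quality_report` name, the choice of checks (missing values and group representation), and the 10% minimum share are all illustrative assumptions rather than thresholds taken from the regulation.

```python
from collections import Counter
from typing import Any, Dict, List


def dataset_quality_report(
    rows: List[Dict[str, Any]],
    required_fields: List[str],
    group_field: str,
    min_group_share: float = 0.1,  # assumed threshold, set per use case
) -> Dict[str, Any]:
    """Summarize missing values and group representation for a dataset."""
    if not rows:
        raise ValueError("cannot assess an empty dataset")

    total = len(rows)
    # Share of rows where each required field is absent or None.
    missing_rate = {
        field: sum(1 for row in rows if row.get(field) is None) / total
        for field in required_fields
    }

    # Share of each group among rows that have a group value at all.
    group_counts = Counter(
        row[group_field] for row in rows if row.get(group_field) is not None
    )
    counted = sum(group_counts.values())
    group_share = {group: n / counted for group, n in group_counts.items()}
    underrepresented = sorted(
        group for group, share in group_share.items() if share < min_group_share
    )

    return {
        "row_count": total,
        "missing_rate": missing_rate,
        "group_share": group_share,
        "underrepresented_groups": underrepresented,
        "passed": not underrepresented
        and all(rate == 0 for rate in missing_rate.values()),
    }
```

Storing the returned dictionary alongside the dataset version gives reviewers a concrete artifact to inspect, instead of a general assurance that the data was checked.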

Building and maintaining this level of technical visibility, tracing data from source to model output across complex, dynamic systems, represents a significant technical challenge. It requires sophisticated tools and processes that go far beyond manual spreadsheets or fragmented logs.

This is precisely where platforms like Relyance AI offer a solution, particularly for high-risk systems.

Relyance AI automates data mapping and inventory using ML/NLP to discover, classify, and map data flows across your ecosystem, providing a comprehensive, real-time view. This automation delivers the end-to-end visibility and crucial data lineage needed to understand your data landscape and demonstrate compliance for data governance and transparency.

Furthermore, it compares obligations with operational reality and provides AI-powered risk insights to proactively spot potential issues. The result? Significantly reduced manual effort (up to 95%), fewer errors, and continuous, real-time compliance monitoring for regulations like the EU AI Act.

Navigating the future of compliant AI

The EU AI Act is setting a global precedent for regulating artificial intelligence. For organizations developing or using high-risk AI, the technical requirements are clear: continuous monitoring, robust data governance, transparent operations, and verifiable data lineage are non-negotiable.

Proactive preparation, focusing on building the necessary technical infrastructure for real-time compliance, is not just about meeting regulatory deadlines; it's about building trustworthy AI systems that operate safely, ethically, and accountably in a world that increasingly demands it.

The future of AI depends on our ability to manage its complexity with technical precision and regulatory diligence.

To see how these compliance requirements fit into a holistic AI governance strategy, explore our AI Governance Guide.