What if your AI isn't just wrong, but dangerously, subtly wrong?

Artificial intelligence is no longer a futuristic concept; it's integrated into critical business processes, powering everything from customer interactions to financial decisions and medical diagnoses. When these systems work flawlessly, they deliver incredible value.

But when they fail, the consequences can be severe: financial loss, reputational damage, legal exposure, and eroded trust. Ignoring the possibility of AI failure isn't an option; preparing for it is essential. That preparation is the core of AI incident response. For a complete look at establishing your proactive foundation, download our Definitive Guide to AI Governance.

Things you’ll learn:

- How to use early warning systems to detect potential AI incidents before they cause significant damage.

- Why robust data lineage is essential for quickly tracing the root cause of AI failures.

- How automated workflows can connect detection to faster, more effective resolution.

- The core components needed to shift from reactive cleanup to proactive AI incident response.

Building AI early warning systems

For too long, dealing with system failures meant waiting for something to break dramatically before investigating. This "post-incident analysis" approach is like waiting for a house to burn down before installing smoke detectors. In the complex world of AI, where failures can be subtle (biased outputs, performance drift, unexpected predictions) or catastrophic (system outages, incorrect critical decisions), a reactive stance is simply untenable.

Effective AI incident response begins with shifting left: detecting potential incidents before they cause significant damage. This requires continuous, real-time monitoring of how AI systems behave and of the data flowing through them. The goal is an early warning system that can spot anomalies such as:

- Degradation in model performance metrics.

- Drift in the distribution of input data or predictions compared to training data.

- Unexpected spikes or drops in output values.

- Changes in model fairness or bias scores over time.

- Issues with data quality or completeness entering the system.

By continuously observing these signals, organizations can identify potential problems in their infancy, allowing for intervention while the issue is still manageable, not a full-blown crisis.
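
To make the drift signal concrete, here is a minimal sketch of one widely used drift metric, the Population Stability Index (PSI), which compares how a live feature or prediction is distributed across bins against its training baseline. The function names, the bin edges, and the 0.2 alert threshold are illustrative assumptions rather than the behavior of any particular monitoring product.

```python
import math
from bisect import bisect_right
from typing import Sequence

# Hypothetical alert threshold; 0.2 is a commonly cited rule of thumb for PSI,
# but it should be tuned per feature and use case.
PSI_ALERT_THRESHOLD = 0.2


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> list[float]:
    """Share of `values` in each bin defined by sorted interior `edges`.

    k edges define k + 1 bins: values below edges[0] land in bin 0 and values
    at or above edges[-1] land in the last bin. Empty bins are floored at `eps`
    so the logarithm in the PSI formula stays finite.
    """
    if not values:
        raise ValueError("cannot compute proportions of an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], live: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(live, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def check_drift(baseline: Sequence[float], live: Sequence[float],
                edges: Sequence[float], threshold: float = PSI_ALERT_THRESHOLD) -> dict:
    """Return the PSI score and whether it crosses the alert threshold."""
    psi = population_stability_index(baseline, live, edges)
    return {"psi": round(psi, 4), "drift_alert": psi > threshold}


if __name__ == "__main__":
    training_scores = [0.1, 0.2, 0.25, 0.3, 0.4, 0.5, 0.55, 0.6, 0.7, 0.8]
    live_scores = [0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.9, 0.95, 0.95]
    edges = [0.25, 0.5, 0.75]
    print(check_drift(training_scores, live_scores, edges))  # drift_alert: True
```

Running a check like this on a schedule for each monitored feature and output turns "drift" from a vague worry into a number that can page someone. PSI is only one option; other statistical distance measures can fill the same role.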

The power of AI data lineage

Even with early warning systems, incidents can and will occur. When they do, time is of the essence. Pinpointing why an AI system failed in a complex, interconnected environment can feel like finding a needle in a digital haystack.

Was it an issue with the input data? A problem with model training? A bug in the deployment code? An infrastructure hiccup?

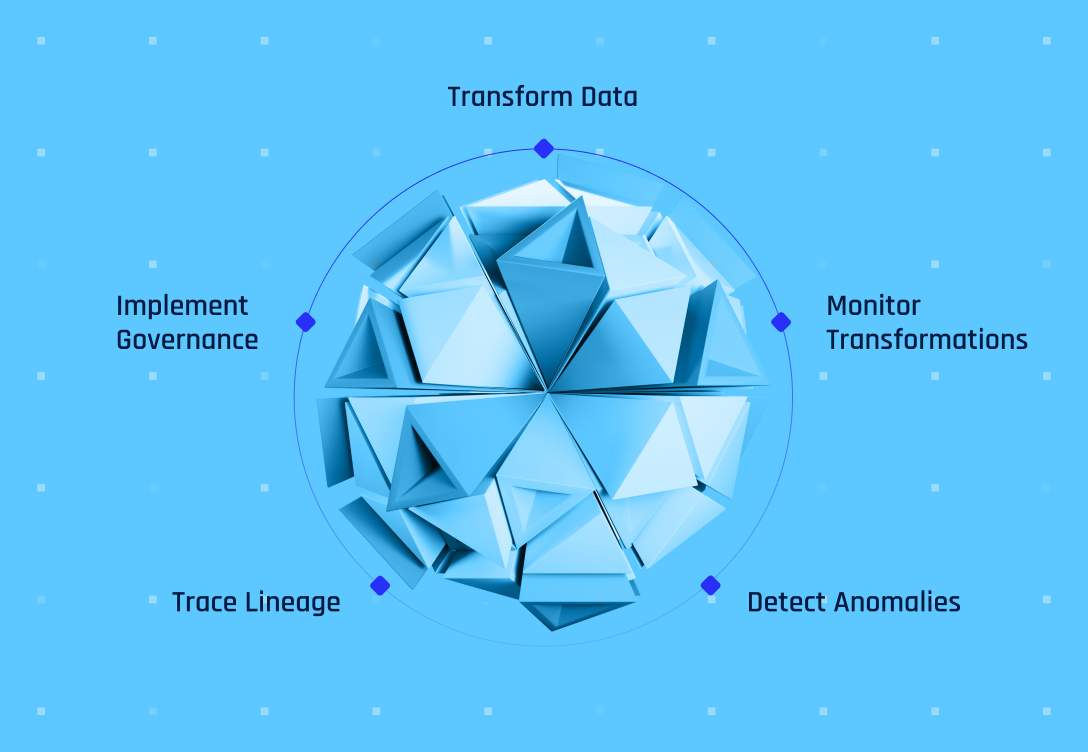

Tracing the root cause of AI failures requires deep visibility into the system's lifecycle. End-to-end data lineage is a key forensic tool for this work. It provides a clear, visual map of how data moves from its source, through transformations, into the AI model, and out to its final destination.

When an incident is detected, having robust data lineage allows teams to rapidly:

- Trace the specific data inputs that led to the erroneous output.

- Identify which version of the model processed the data.

- See what upstream systems or processes provided potentially faulty data.

- Understand the dependencies between different components in the AI pipeline.

This ability to quickly trace the flow not only isolates the problem area but also accelerates the process of understanding what went wrong, dramatically reducing the time spent on manual investigation and guesswork.
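
As a rough illustration of what "tracing upstream" means mechanically, the sketch below models lineage as a directed graph in which each node lists the nodes it reads from, then walks it breadth-first from a faulty output. The node names (including the versioned model `credit_model_v3`) are hypothetical; in practice the graph would be populated by a lineage or data-mapping tool rather than written by hand.

```python
from collections import deque

# Hypothetical lineage graph: each node maps to the nodes it reads from directly.
# Names are illustrative; a real graph would come from a lineage or data-mapping tool.
LINEAGE: dict[str, list[str]] = {
    "loan_decision_api": ["credit_model_v3"],
    "credit_model_v3": ["feature_store.applicant_features"],
    "feature_store.applicant_features": ["etl.clean_applications",
                                         "vendor.credit_bureau_feed"],
    "etl.clean_applications": ["crm.raw_applications"],
    "vendor.credit_bureau_feed": [],
    "crm.raw_applications": [],
}


def trace_upstream(node: str, lineage: dict[str, list[str]]) -> list[str]:
    """Every upstream dependency of `node`, nearest first (breadth-first search)."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(lineage.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:  # shared inputs are visited once
            continue
        seen.add(current)
        order.append(current)
        queue.extend(lineage.get(current, []))
    return order


def root_sources(node: str, lineage: dict[str, list[str]]) -> list[str]:
    """Upstream nodes with no inputs of their own: the original data sources."""
    return [n for n in trace_upstream(node, lineage) if not lineage.get(n)]


if __name__ == "__main__":
    # Which systems could have fed a bad decision out of the API?
    print(trace_upstream("loan_decision_api", LINEAGE))
    print(root_sources("loan_decision_api", LINEAGE))
```

Nearest-first ordering reflects a common investigation habit: check the model version and feature pipeline before chasing raw sources further upstream.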

Streamlining AI incident resolution

Detecting an incident and understanding its root cause are crucial steps, but they are only valuable if they lead to swift and effective action. A disconnect between detection systems and engineering and operational processes can leave organizations paralyzed, knowing there's a problem but lacking the automated workflows to fix it efficiently.

Automated remediation workflows bridge this gap. They connect the insights from early warning systems and root cause analysis directly to the actions needed for resolution. This can include triggering automated processes such as:

- Generating tickets and assigning them to the relevant engineering teams.

- Alerting data science teams to potential model drift requiring retraining.

- Automatically isolating faulty data streams to prevent further corruption.

- Rolling back to a previous stable version of the model or code.

- Notifying impacted users or stakeholders according to pre-defined protocols.

Automating these steps minimizes human intervention during high-pressure situations, reduces the risk of manual errors, and drastically cuts down the time it takes to mitigate the incident's impact, protecting business operations and preserving user trust.
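
One way to wire detection to action is a playbook that maps each alert type to an ordered list of remediation steps. The sketch below is a minimal, hypothetical version: the alert kinds, step names, and escalation rule are assumptions for illustration, and each step is returned as a string where a real system would call a ticketing, paging, or deployment tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    kind: str      # e.g. "model_drift", "data_quality", "error_spike" (hypothetical)
    model: str     # identifier of the affected model or pipeline
    severity: str  # "low" or "high"


# Hypothetical playbook: alert kind -> ordered remediation steps.
PLAYBOOK: dict[str, list[str]] = {
    "model_drift": ["open_ticket:data-science", "notify:model-owners"],
    "data_quality": ["quarantine_stream", "open_ticket:data-engineering"],
    "error_spike": ["rollback_model", "open_ticket:ml-platform", "notify:stakeholders"],
}

# Unknown alert kinds still get routed to a human rather than dropped.
DEFAULT_STEPS = ["open_ticket:on-call"]


def plan_remediation(alert: Alert) -> list[str]:
    """Turn an alert into an ordered list of actions tagged with the affected model."""
    steps = list(PLAYBOOK.get(alert.kind, DEFAULT_STEPS))  # copy so the playbook is not mutated
    # Assumed escalation rule: high-severity drift triggers a rollback before anything else.
    if alert.kind == "model_drift" and alert.severity == "high":
        steps.insert(0, "rollback_model")
    return [f"{step} [{alert.model}]" for step in steps]


if __name__ == "__main__":
    for action in plan_remediation(Alert("model_drift", "credit_model_v3", "high")):
        print(action)  # a real system would call ticketing, paging, or deployment APIs here
```

Keeping the playbook as data rather than scattered logic means it can be reviewed, versioned, and agreed on before an incident, which is exactly when those decisions are easiest to make.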

How Relyance AI can help

Building such a robust AI incident response strategy requires foundational capabilities that provide clear visibility into the data fueling your AI. While Relyance AI isn't an AI model monitoring tool itself, its strength lies in automating the critical, often manual, process of data mapping and inventory.

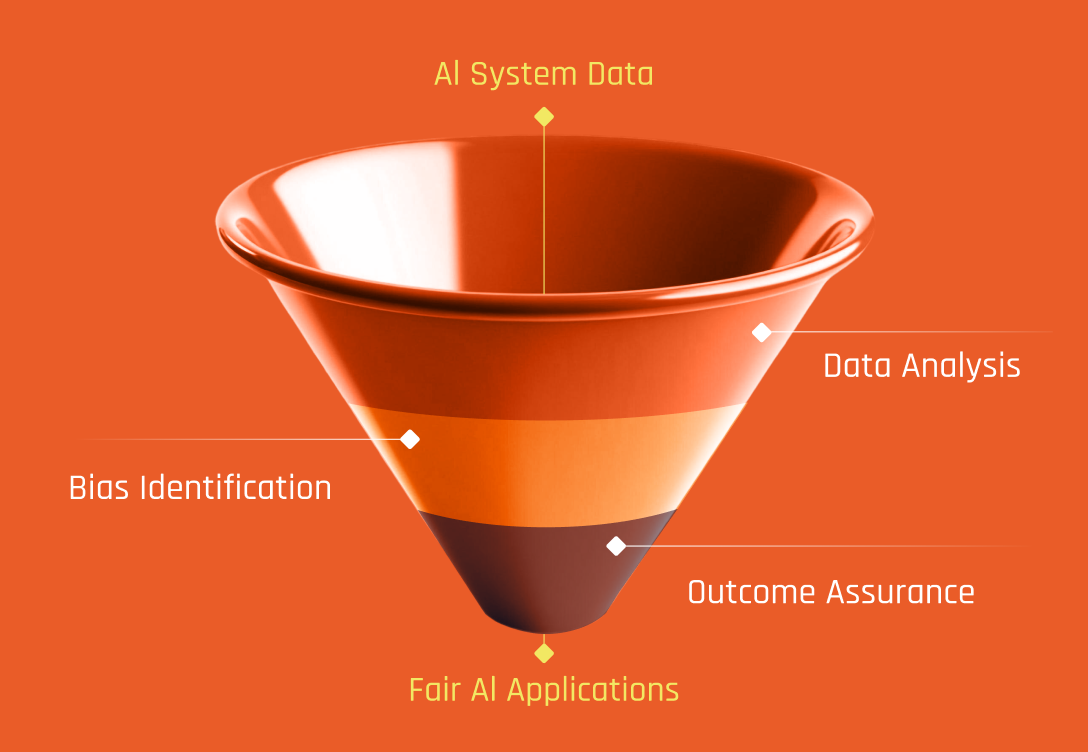

Many AI failures stem from problems with the data itself: bias, poor quality, privacy violations, or use for unintended purposes. Relyance AI's ability to provide a real-time, accurate map of data flows, classify data automatically, and help ensure data use stays within the organization's obligations offers a vital layer of defense against these data-centric AI incidents.

Furthermore, its comprehensive data lineage capabilities can be invaluable during the root cause analysis phase, helping trace problematic data back to its source and providing essential context when data quality or governance issues are suspected.

Final notes

AI incident response isn't a luxury; it's a necessity for organizations responsibly deploying artificial intelligence at scale. Moving from a reactive stance to one that emphasizes early detection, rapid root cause analysis fueled by data lineage, and automated remediation workflows is paramount.

By building these capabilities, companies can navigate the inherent risks of AI, mitigate failures quickly when they occur, and build resilient, trustworthy systems that deliver on their transformative promise. The future belongs to those who don't just build AI, but build it to fail gracefully and recover rapidly.

For a complete roadmap on building governance structures that prevent incidents, respond effectively, and maintain trust, explore our AI Governance Guide.