AI Governance: From Principles to Practice

Secure and govern all AI—sanctioned, shadow, and agentic. Eliminate blind spots from model discovery to risk assessment, ensuring continuous compliance so your teams can deploy AI at speed.

Featured

Automating AI documentation and moving beyond manual questionnaires

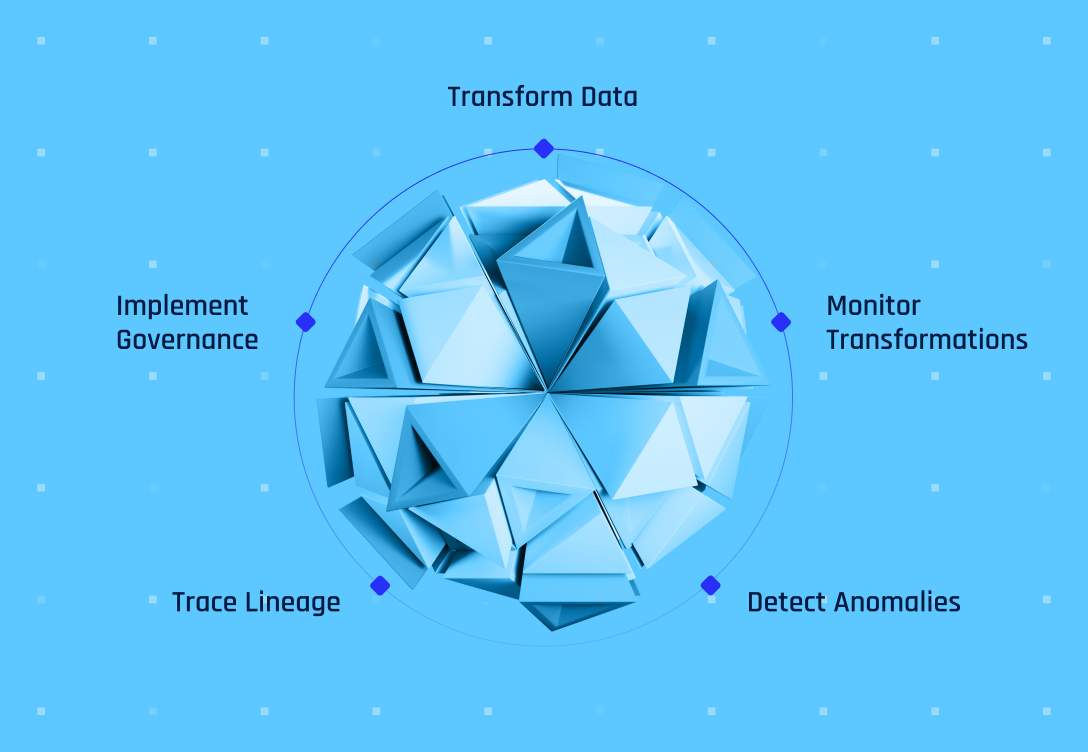

The hidden risk of transformed data in AI models

Effective AI governance begins with data flow monitoring

Explore related resources

Automated AI bias detection without manual assessments

How proactive AI incident response protects your future

Preparing for the EU AI Act: technical requirements for real-time compliance

Watch: Track fast-moving sensitive data

AI Governance FAQ

What is AI governance and why do companies need it?

AI governance is a comprehensive framework of policies, processes, and oversight mechanisms that ensures organizations develop and deploy artificial intelligence systems safely, ethically, and in compliance with regulations. It functions like corporate governance but addresses AI-specific challenges including model bias, data security, and algorithmic accountability.

Companies need AI governance in 2025 for three reasons: EU AI Act obligations are now taking effect and carry steep penalties for non-compliance, AI deployment has moved from experimental to mission-critical operations, and stakeholders increasingly demand transparency and responsible AI practices. With over 90% of organizations increasing AI investment but fewer than 15% having mature governance programs, this gap represents significant reputational, financial, and compliance risk. Effective governance provides the guardrails that enable teams to innovate safely while managing bias, drift, security vulnerabilities, and regulatory obligations.

What are the main requirements of the EU AI Act for high-risk AI systems?

The EU AI Act imposes strict obligations on providers of high-risk AI systems across five key areas. Organizations must establish a continuous risk management system throughout the entire AI lifecycle, implement rigorous data governance to ensure training data meets quality standards and minimize discriminatory outcomes, and create comprehensive technical documentation before market deployment.

Additionally, high-risk systems must be designed for transparency and effective human oversight, allowing operators to intervene, override, or shut down the system when necessary.

Finally, Article 15 mandates that systems achieve appropriate levels of accuracy, robustness against errors and faults, and cybersecurity protections. For general-purpose AI models, obligations began to apply on August 2, 2025, and require providers to maintain technical documentation, disclose model capabilities and limitations, establish copyright policies, and publish training data summaries.

How do you measure and reduce AI risk in production models?

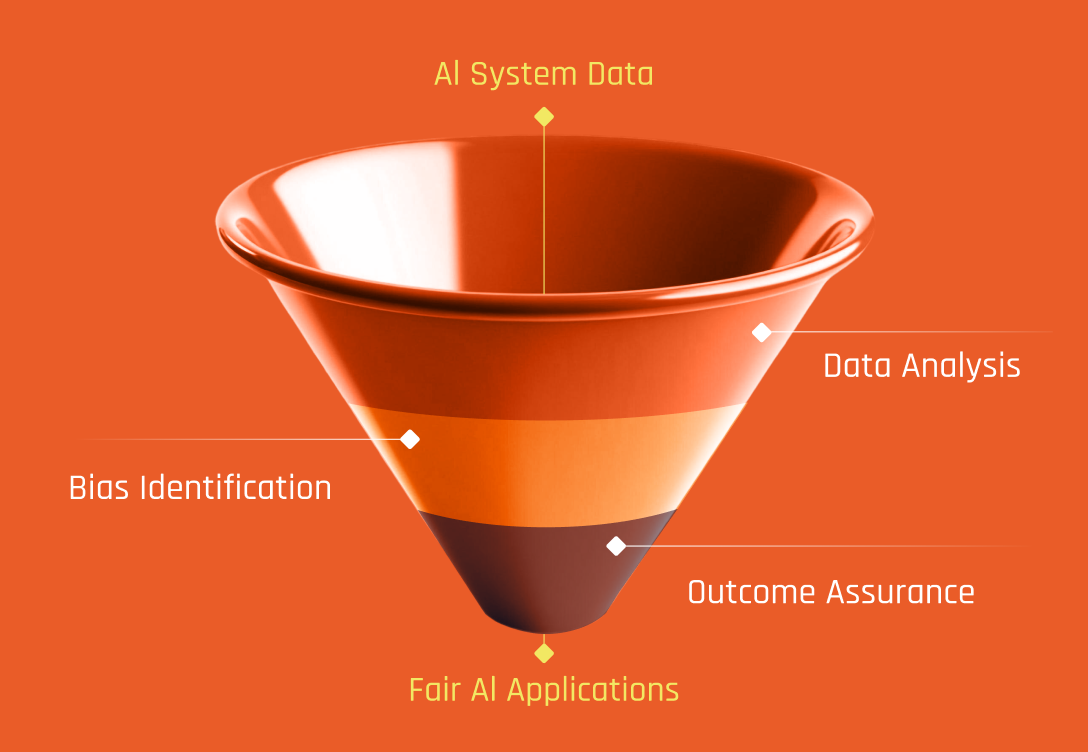

Measuring and reducing AI risk requires systematic monitoring across three critical dimensions: bias, drift, and security. For bias detection, organizations use statistical fairness metrics to compare model outcomes across demographic segments and identify discriminatory patterns before they cause harm, then apply mitigation techniques like data re-sampling or prediction adjustments.
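As a rough illustration of what a statistical fairness metric can look like, the sketch below compares positive-prediction rates across demographic segments. It assumes binary predictions (0 or 1) and a single group label per record. The function names, the sample data, and the choice of demographic parity as the metric are all illustrative assumptions, not a prescribed method.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model outputs for two segments, "a" and "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(preds, groups))
print(demographic_parity_difference(preds, groups))
print(disparate_impact_ratio(preds, groups))
```

A large gap or a low ratio is a signal to investigate, not proof of discrimination. Which metric and threshold are appropriate depends on the use case and the applicable regulation.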

Model drift monitoring tracks both concept drift (when the relationship between inputs and outcomes changes, so prediction accuracy degrades) and data drift (when input data distributions shift), triggering model retraining on recent data when thresholds are exceeded. Security assessment involves testing for threats including adversarial attacks, data poisoning, and model extraction, then hardening systems through adversarial training and privacy-enhancing technologies. Effective risk management requires real-time monitoring tools that continuously watch for performance degradation, automated alerting systems, and a structured incident response plan to address issues quickly before they impact users or violate compliance requirements.
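One common way to put a number on data drift is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample against a recent production sample. The sketch below is a minimal version under stated assumptions: a single numeric feature, equal-width bins derived from the reference data, and a retraining threshold of 0.2, which is a widely used rule of thumb rather than a standard. The function names are hypothetical.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between two samples of one numeric feature (0 = identical bins)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def needs_retraining(reference, current, threshold=0.2):
    """Flag drift when PSI exceeds the (assumed) threshold."""
    return population_stability_index(reference, current) > threshold


# Hypothetical feature values: training-time sample vs. a shifted production sample.
reference = [float(x) for x in range(100)]
shifted = [x + 50.0 for x in reference]

print(needs_retraining(reference, reference))  # same distribution
print(needs_retraining(reference, shifted))    # distribution moved upward
```

PSI only covers data drift on one feature. Detecting concept drift generally requires ground-truth labels so that live accuracy can be compared against a baseline.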
