The AI Data Ultimatum

Why 8 in 10 consumers are ready to revolt

New research from Relyance AI reveals the trust crisis threatening your business

Do consumers trust how companies handle data in AI systems?

No. And they're ready to act.

The ultimatum in numbers

1,000+ consumers told us exactly what they expect. Here's what they'll do if you can't deliver.

82% See AI data loss-of-control as a serious personal threat

- This is a personal fear, not an abstract concern: 43% say AI data loss-of-control is "very serious" to them personally, making this an existential business issue, not a compliance checkbox

- Reputation risk is immediate: When 8 in 10 customers see AI data loss-of-control as a personal threat, every AI deployment carries reputational liability

- The trust baseline has collapsed: Only 18% don't see AI data loss-of-control as a serious problem. Your starting point is near-universal consumer distrust

76% Would switch brands for transparency, even at higher cost

- Transparency is now a premium feature: More than 3 in 4 consumers will pay more for verified AI data practices. This is a revenue opportunity, not just risk mitigation

- Price is no longer the primary lever: Half of consumers (50%) will choose transparency even if it means forgoing the lowest price, fundamentally reshaping competitive dynamics

- First-mover advantage is massive: The first company in your industry to prove transparency is first in line for the 76% of consumers who say they would switch

81% Suspect undisclosed AI training on their personal data

- Assumption of guilt is the default: 4 in 5 consumers believe you're training AI on their data without telling them. Whether you are or not, you're already convicted in their minds

- Disclosure is mandatory, not optional: You must prove you're NOT misusing data, not just promise good practices

- Legal and regulatory exposure accelerates: When 81% believe the same violation is occurring, class actions and enforcement actions become more likely

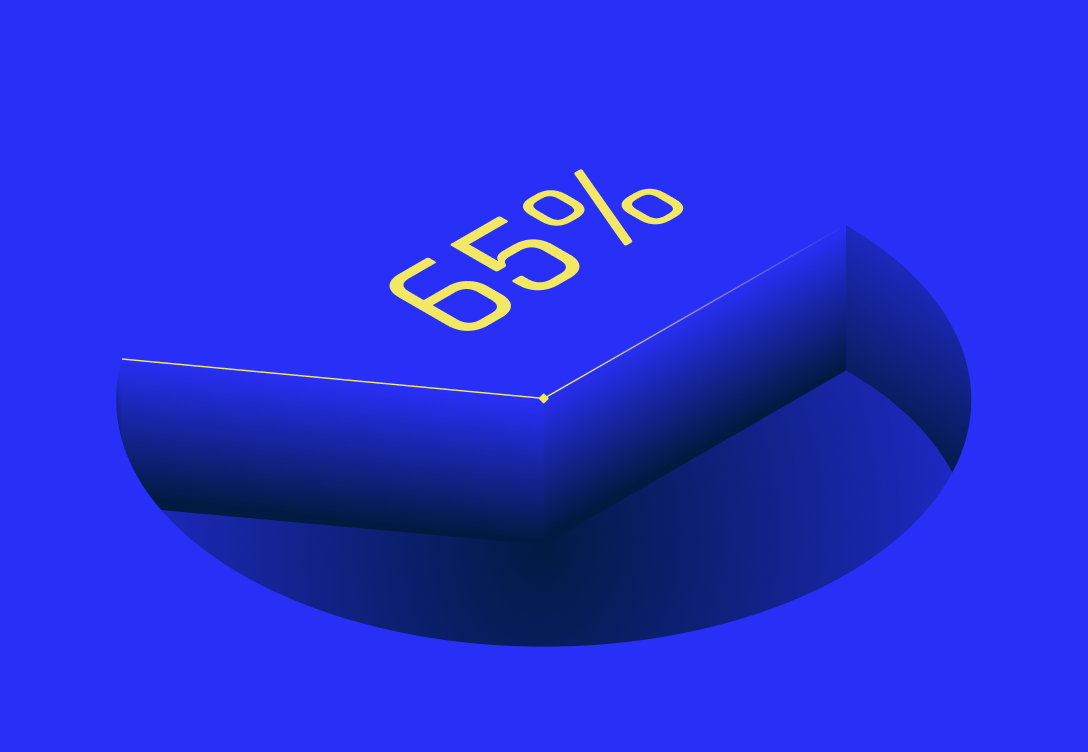

84% Would abandon or restrict companies over AI opacity

- Immediate behavioral consequences: This isn't a "future churn risk." 84% would act as soon as a company admits it can't explain how it uses their data

- Complete abandonment is common: 57% would stop using your product entirely (not just limit sharing). This is revenue elimination, not reduction

- The middle ground is collapsing: Only 16% would continue with no changes. Neutrality is dead: you're either transparent or abandoned

80% Concerned about personal data threats during holidays

- Peak season = peak scrutiny: Nearly 80% enter holiday shopping worried about data threats. Your busiest revenue period is your highest-risk moment

- Digital exposure is unavoidable: With most holiday shopping moving online, consumers feel exposed and are watching how you handle the surge in data collection

- Holiday stress amplifies AI concerns: More than two-in-three consumers are already stressed and unexcited about holiday shopping. AI data anxiety compounds this negative baseline


Show them you're listening

Your customers demand proof, not promises. AI Governance Expert discovers shadow AI, traces every data journey, and delivers the transparency 76% would switch brands for.

Get the complete findings

All survey data, detailed methodology, demographic breakdowns, and key insights from 1,000+ consumers. See the full story behind the statistics.

FAQ

Do consumers trust AI with their personal data?

No. Our 2024 survey of 1,000+ Americans found that 82% see AI data loss-of-control as a serious threat to them personally, including 43% who say it's "very serious."

This isn't an abstract concern or a future worry: consumers perceive AI data handling as an immediate, serious, personal threat right now. Only 18% don't view it as a serious problem, so your baseline assumption should be that more than 4 in 5 customers fear their data is out of your control.

Why don't consumers trust companies with AI data?

The survey reveals four compounding factors:

- Universal suspicion of secret training (81%) — More than 4 in 5 consumers believe companies are using their personal data for undisclosed AI training. This isn't a minority concern—it's the dominant assumption.

- Perceived loss of control (82% see as serious threat) — Consumers believe companies can no longer track where data goes inside complex AI systems, creating the perception that data is "loose" and unprotected.

- Holiday season digital exposure (nearly 80% concerned) — Consumers know most shopping happens online, creating unavoidable data collection. They enter peak shopping season already anxious about data threats.

- Emotional stress compounds concern — More than two-in-three Americans begin holiday shopping stressed and unexcited. AI data anxiety amplifies this negative baseline.

The throughline: Consumers assume the worst about your AI practices. Proving otherwise is now mandatory.

Would consumers pay more for AI transparency?

Yes. 76% would switch brands for personal data transparency, even if it costs more.

Even more striking: 50% will choose transparency even if it means forgoing the lowest price.

This fundamentally reframes AI transparency:

- Not a cost center—a revenue driver: Half your market will pay premium prices for verified AI practices

- Not a compliance issue—a competitive weapon: 76% switching potential means transparency is a primary basis of competition

- Not optional—table stakes: When 3 in 4 customers demand it, transparency becomes mandatory for market participation

For enterprises, this means: Budget for AI transparency should come from growth/revenue, not security/compliance.

What happens if companies can't explain AI data usage?

The behavioral response is swift and severe. 84% would react to opacity with abandonment or restriction:

- 57% would stop using the company entirely — Complete customer loss, not just reduced engagement

- 27% would continue but limit data sharing — Degraded AI performance, reduced personalization, lower customer value

- Only 16% would continue with no changes — You cannot build a sustainable business on this segment

The implication: Admitting opacity is commercial suicide. If you can't explain data usage, don't admit it publicly—fix it first, then disclose.

But silence isn't safety: 81% already suspect you're hiding something. The only winning move is proving transparency before questions become accusations.

What do consumers want from companies using AI?

Based on survey priorities, consumers demand:

- Proof you haven't lost control — 82% see data loss-of-control as a serious threat; they want verification you can track every data point through every AI system

- Disclosure of AI training practices — 81% suspect undisclosed training; they want explicit confirmation about what data feeds which models

- Real-time visibility and detection — Consumers want to know you can catch problems as they happen, not discover them in breach notifications months later

- Transparency worth paying for — 76% would switch for it; 50% would pay more for it—consumers want transparency so meaningful they'd change brands to get it

Notably, consumers don't want promises—they want proof. "We take privacy seriously" doesn't work when 81% assume you're lying.

How does holiday shopping affect AI data concerns?

Holiday shopping creates a perfect storm of AI data anxiety:

Nearly 80% of Americans are concerned about threats to their personal data during holiday shopping season. This concern peaks when:

- Transaction volumes spike — More purchases = more payment data, shipping addresses, purchase history flowing into AI systems

- Digital exposure is unavoidable — Most holiday shopping happens online; consumers can't avoid data collection

- Emotional stress is already high — More than two-in-three begin the season stressed and unexcited; data anxiety compounds negative emotions

The business implication: Your busiest revenue period (Q4 holiday shopping) is your highest AI transparency risk period. The 84% who would abandon or restrict opaque companies are likely to act when stress is highest and data collection is most visible.

The opportunity: "Holiday shopping you can trust—we prove where your data goes" becomes the message that captures stressed, concerned consumers looking for a safe place to shop.

What makes transparency valuable enough to switch brands?

The survey reveals transparency must be:

- Verifiable, not promised — 81% already suspect companies lie about training data; promises don't work anymore

- Comprehensive, not selective — 82% fear loss of control; showing transparency for one AI system while hiding others fails

- Continuous, not point-in-time — Consumers want ongoing verification, not one-time audits

- Tangible, not abstract — "We value privacy" is meaningless; "Here's exactly where your checkout data went: collection → fraud detection model → encrypted storage → deleted after 90 days" is valuable

The 76% who would switch aren't looking for marketing claims—they're looking for companies that can prove data control with the same rigor you'd prove financial controls to auditors.

What methodology was used for this AI trust survey?

Relyance AI conducted this research in partnership with Truedot.ai, surveying 1,000+ U.S. consumers aged 18+ in December 2025.

Sample composition:

- Nationally representative across age, gender, income, and geography

- Mix of high and low AI adoption users

- Fielded during pre-holiday shopping season to capture attitudes during peak consumer data exposure

Survey design: The survey examined:

- Perception of AI data control and personal threat levels

- Suspicion of undisclosed AI training practices

- Behavioral responses to opacity (abandonment, restriction, switching)

- Willingness to pay a premium for transparency

- Holiday shopping context and heightened data concerns

Platform: Fielded using Truedot.ai's consumer trust survey technology, designed specifically to measure authentic consumer attitudes (not hypothetical preferences).

Margin of error: ±3.2%
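For readers who want to sanity-check a reported margin of error, the textbook normal-approximation formula for a proportion is MoE = z × sqrt(p(1 − p) / n). The sketch below is illustrative only and is not the survey's own calculation: it assumes simple random sampling, 95% confidence (z = 1.96), and the conservative p = 0.5, none of which the methodology above states. The function names are hypothetical.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes simple random sampling. z = 1.96 corresponds to 95% confidence,
    and p = 0.5 gives the most conservative (largest) margin.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return z * math.sqrt(p * (1.0 - p) / n)


def sample_size_for(moe: float, p: float = 0.5, z: float = 1.96) -> int:
    """Smallest n whose margin of error is at most `moe`, under the same assumptions."""
    if moe <= 0:
        raise ValueError("margin of error must be positive")
    return math.ceil((z ** 2) * p * (1.0 - p) / moe ** 2)


if __name__ == "__main__":
    # Margin in percentage points for a few sample sizes.
    for n in (1000, 1100):
        print(f"n={n}: ±{margin_of_error(n) * 100:.1f} points")
    # Sample size that would produce a ±3.2-point margin under these assumptions.
    print("n for ±3.2 points:", sample_size_for(0.032))
```

Under these assumptions, 1,000 respondents give roughly ±3.1 points, so a reported ±3.2% sits in the same range. A slightly larger published figure can reflect weighting, which lowers the effective sample size below the raw respondent count.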
