Artificial intelligence is no longer a futuristic concept; it's a core business tool driving efficiency, innovation, and growth. But with great power comes great responsibility, and significant risk. This is where AI governance enters the picture. It’s the essential framework of rules, policies, and processes that ensures your organization develops and deploys AI systems safely, ethically, and in compliance with a rapidly growing web of regulations. Strong AI governance isn't just about avoiding fines; it's about building trust with customers, managing reputational risk, and unlocking the full, sustainable value of AI.

This guide provides the strategic blueprint you need to navigate the new era of AI with confidence.

Things you'll learn:

- Understand key regulations like the EU AI Act and standards like the NIST AI RMF.

- Learn how to find and fix top AI risks, including model bias, drift, and security flaws.

- Discover who to put on your AI governance committee and what their roles should be.

- Find out which tools you need to automate governance and monitor your AI systems effectively.

What is AI governance?

At its heart, AI governance is a system of oversight for artificial intelligence. Think of it like corporate governance for finance or HR, but specifically tailored to the unique challenges of AI. It’s a structured approach that an organization uses to direct, manage, and monitor its AI activities, ensuring they align with its ethical principles and business goals. A mature program is built on a foundation of AI governance best practices that guide every decision.

It’s not just a single policy. It’s a comprehensive program that makes sure AI systems operate in a way that is fair, secure, and accountable. One of the core pillars of this is ensuring stakeholders can understand and trust the system's decisions, which requires a strong commitment to AI explainability.

Ultimately, robust AI governance provides the guardrails that allow data science and business teams to innovate freely but safely. It answers critical questions like: How do we ensure our AI isn't discriminating against certain groups? How can we explain a decision made by a complex algorithm? By having a formal system in place, you move from a reactive approach to a proactive, strategic one.

Why is AI governance important in 2025?

The urgency for establishing formal AI governance has never been higher. In 2025, we've moved past the experimental phase of AI into deep operational dependency. This rapid adoption has brought inherent risks into sharp focus, making governance a non-negotiable business function for several critical reasons.

Responding to regulatory pressure

Landmark regulations are no longer on the horizon; their deadlines are here. With steep penalties for non-compliance, achieving EU AI Act compliance is a top priority for any organization operating in or serving the European market. A structured governance program is the only viable path to meeting these complex legal mandates.

Managing reputational and financial risk

A single incident of a biased algorithm or a security breach can cause immense reputational damage and financial loss. Understanding the common AI governance challenges and implementing a program to address them proactively is the primary defense against these high-stakes failures.

Controlling AI proliferation

Businesses are deploying hundreds, sometimes thousands, of models. Without a central framework, this creates a chaotic environment. Effective AI inventory management is the first step to bringing order to this complexity, providing a single source of truth for all AI assets in the organization.

Building stakeholder trust

Customers, investors, and employees are increasingly aware of AI's potential pitfalls. Demonstrating a commitment to responsible AI through a transparent governance program is becoming a key competitive differentiator and a prerequisite for building the long-term trust needed for sustainable growth.

Fact-file: The Governance Gap

A recent industry study found that while over 90% of organizations are increasing their investment in AI, less than 15% have a mature, fully operational AI governance program in place. This gap represents a significant and growing area of enterprise risk.

What is an AI governance framework (NIST AI RMF explained)?

An AI governance framework is a structured set of guidelines that helps an organization manage AI risks systematically. One of the most influential is the NIST AI Risk Management Framework (AI RMF 1.0). It's not a rigid checklist but a flexible, voluntary framework that helps organizations structure their thinking around AI risk. It is organized around four core functions: Govern, Map, Measure, and Manage.

Govern

This function is the foundation, involving the creation of a risk-aware culture and the establishment of policies and roles for AI oversight. It ensures that risk management is a shared, enterprise-wide responsibility.

Map

This function is about context and discovery. Teams "map" out an AI system’s purpose, its potential impact, and its risk landscape. This is where you catalog your AI use cases and conduct impact assessments.

Measure

Here, you analyze and assess the risks identified in the Map stage. This involves using qualitative and quantitative methods to get a clear picture of the risk profile. Defining the right AI governance metrics is crucial for making this function effective and for tracking risk reduction over time.

Manage

Based on the results of the Measure function, this is where you take action. This function is all about having a systematic approach to AI Risk Management. You prioritize the most significant risks and implement strategies to mitigate, transfer, or accept them, followed by continuous monitoring to ensure those strategies remain effective.
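
To make the hand-off from Measure to Manage concrete, here is a minimal sketch of a risk register in Python. It is illustrative only: the NIST AI RMF does not prescribe a scoring formula, so the 1-5 likelihood and impact scales, the `Risk` class, the `prioritize` helper, and the `treat_at` threshold are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; an assumption, not a NIST rule.
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], treat_at: int = 12) -> list[tuple[Risk, str]]:
    """Rank risks from highest to lowest score and suggest a treatment."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [
        (r, "mitigate" if r.score >= treat_at else "accept and monitor")
        for r in ranked
    ]


register = [
    Risk("Input data drift", likelihood=3, impact=3),
    Risk("Proxy bias in credit model", likelihood=4, impact=5),
    Risk("Model extraction via public API", likelihood=2, impact=4),
]

plan = prioritize(register)
for risk, action in plan:
    print(f"{risk.score:>2}  {risk.name}: {action}")
```

In practice the scales and the threshold would come from your own risk appetite statement, and the suggested treatment would be a starting point for committee review rather than an automatic decision.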

What are the core requirements of ISO/IEC 42001?

While NIST AI RMF is a voluntary framework, ISO/IEC 42001:2023 is a formal, certifiable management system standard. It specifies the requirements for establishing, implementing, and continually improving an AI Management System (AIMS).

The standard's core requirements include:

Leadership and context

Organizations must define the scope of their AIMS and get commitment from top management, who must establish a formal AI policy and assign key roles and responsibilities.

Planning and risk assessment

This involves conducting an AI system impact assessment to understand the potential consequences for individuals and society, and then planning actions to address identified risks.

Support and resources

An effective AIMS requires allocating necessary resources, ensuring personnel are competent, and fostering awareness of the AI policy across the organization.

Operations and performance evaluation

This is the implementation phase. Organizations must plan and control the processes for the entire AI lifecycle, which requires a robust program for AI model lifecycle governance so that every system is managed according to policy from development to retirement. Performance is then evaluated through internal audits and management reviews.

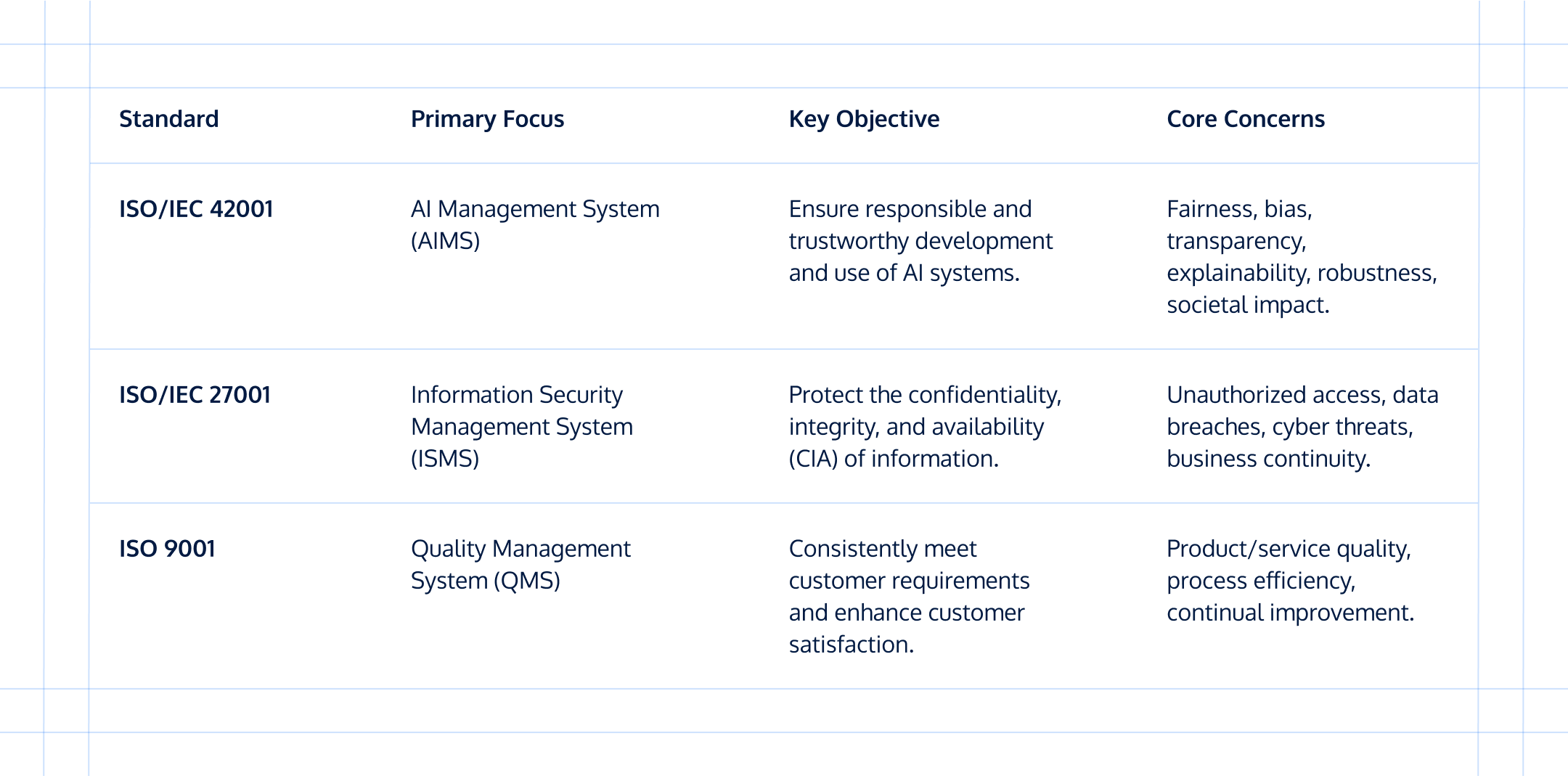

How does ISO 42001 compare to ISO 27001 and other management systems?

For organizations familiar with standards like ISO/IEC 27001 (Information Security), understanding how ISO 42001 fits in is straightforward. It’s designed to be compatible, sharing the same high-level structure (Annex SL) as other major ISO management systems, which allows for easier integration.

The key distinction

The simplest way to view the difference is:

- ISO 27001 is about protecting your data assets from harm.

- ISO 42001 is about protecting people and society from harm caused by your AI systems.

How does AI governance align with the EU AI Act’s high-risk obligations?

The EU AI Act’s strictest rules apply to high-risk AI systems. For these systems, a robust AI governance program is a direct legal requirement.

If you are a provider of a high-risk AI system, you must have the following in place:

- A risk management system: Article 9 requires you to establish, document, and maintain a continuous risk management system throughout the AI system's entire lifecycle.

- Data and data governance: Under Article 10, the data used to train, validate, and test the AI system must meet strict quality criteria. This necessitates a formal approach to AI Data Management to ensure that data governance practices are sufficient to minimize risks and discriminatory outcomes.

- Technical documentation and record-keeping: Under Article 11, you must create detailed technical documentation before placing the system on the market, and Article 12 requires the system to support automatic logging of events. These requirements mean organizations must refine their approach to creating and managing AI documentation to ensure it is compliant, comprehensive, and always up to date.

- Transparency and human oversight: Under Articles 13 and 14, systems must be transparent and designed for effective human oversight, allowing a person to intervene, override, or shut down the system to prevent or minimize risks.

- Accuracy, robustness, and cybersecurity: Finally, Article 15 mandates that systems perform at an appropriate level of accuracy and be resilient against errors, faults, and cyber threats.

What legal obligations hit providers of general-purpose AI models on 2 August 2025?

The EU AI Act created a special category for General-Purpose AI (GPAI) models. A critical deadline for providers of these models is 2 August 2025, by which they must comply with a specific set of transparency and documentation obligations.

Core obligations for all GPAI models

By the deadline, all GPAI model providers must:

- Create and maintain detailed technical documentation.

- Provide information on the model's capabilities and limitations to downstream providers.

- Put in place a policy to comply with EU copyright law and publish a sufficiently detailed summary of the content used to train the model.

Stricter rules for systemic risk models

GPAI models deemed to pose systemic risk face even stricter obligations, including model evaluations, adversarial testing, and reporting serious incidents to the AI Office.

What does a full AI-governance lifecycle look like and where do teams get stuck?

A mature AI governance program is a continuous lifecycle integrated into every stage of AI development.

- Ideation & scoping: Governance begins with an initial risk screening and impact assessment to align the project with ethical principles from the start.

- Data sourcing & preparation: Here, governance ensures data is sourced ethically and is of high quality. Understanding the full history of the data requires robust AI data lineage practices, while preparing it for a model involves carefully managed AI data transformation to prevent introducing bias.

- Model development & training: Data scientists build and train the model with governance guidelines ensuring fairness and robustness are built in from the start.

- Testing & validation: The model is rigorously tested against predefined metrics. An independent review by a governance committee may be required.

- Deployment & integration: Once approved, the model is pushed into production with clear user instructions and transparency notices.

- Monitoring & maintenance: This critical, ongoing phase requires Real-Time AI Monitoring to continuously watch for performance degradation, drift, or new biases. If an issue is detected, a structured AI incident response plan ensures it is addressed quickly and effectively.

- Retirement: When a model is no longer needed, a formal process ensures it is decommissioned securely, with all documentation archived for compliance.
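
The stages above can be pictured as a sequence of gates, where a model only moves forward once the required governance evidence exists. The sketch below is one hedged way to express that in Python. The stage names follow the list above, but the `GATES` mapping, the artifact names (such as `committee_approval`), and the `advance` helper are hypothetical and would need to reflect your own policy.

```python
from enum import Enum


class Stage(Enum):
    IDEATION = 1
    DATA_PREPARATION = 2
    DEVELOPMENT = 3
    VALIDATION = 4
    DEPLOYMENT = 5
    MONITORING = 6
    RETIREMENT = 7


class GateError(Exception):
    """Raised when required governance evidence is missing."""


# Hypothetical evidence required before a model may ENTER each stage.
GATES = {
    Stage.DATA_PREPARATION: {"impact_assessment"},
    Stage.DEVELOPMENT: {"data_lineage_record"},
    Stage.VALIDATION: {"training_report"},
    Stage.DEPLOYMENT: {"validation_report", "committee_approval"},
    Stage.MONITORING: {"transparency_notice"},
    Stage.RETIREMENT: {"archive_plan"},
}


def advance(current: Stage, evidence: set[str]) -> Stage:
    """Move to the next stage only if every required artifact is present."""
    if current is Stage.RETIREMENT:
        raise ValueError("Retirement is the final stage")
    nxt = Stage(current.value + 1)
    missing = GATES.get(nxt, set()) - evidence
    if missing:
        raise GateError(f"Cannot enter {nxt.name}: missing {sorted(missing)}")
    return nxt


evidence = {"validation_report"}
try:
    advance(Stage.VALIDATION, evidence)
except GateError as err:
    print(err)  # deployment is blocked until the committee signs off

evidence.add("committee_approval")
print(advance(Stage.VALIDATION, evidence))
```

Encoding gates as data rather than as scattered if-statements keeps the policy reviewable by non-engineers, which is usually the point of a governance checkpoint.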

How do you measure and reduce AI risk?

Measuring and mitigating AI risk is the technical core of governance. This involves actively testing for and fixing issues in your models, focusing on three critical areas.

Measuring and mitigating bias

AI bias occurs when a model produces systematically prejudiced results. Effective AI bias detection relies on a suite of statistical fairness metrics to compare model outcomes across demographic segments and identify discriminatory behavior before it causes harm.

- Mitigation: Employ techniques like pre-processing (re-sampling data) or post-processing (adjusting predictions).
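
As an illustration of what a statistical fairness metric looks like, the sketch below computes per-group selection rates and two common summaries of them: the demographic parity difference and the disparate impact ratio. The group labels, the toy predictions, and the function names are invented for this example; real audits typically use several metrics and much larger samples.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1 = approved) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 = parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Toy data: group membership and the model's approve (1) / reject (0) output.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
print("selection rates:", rates)
print("parity difference:", demographic_parity_difference(rates))
print("impact ratio:", round(disparate_impact_ratio(rates), 3))
```

A commonly cited rule of thumb treats an impact ratio below 0.8 as a signal worth investigating, but the right threshold depends on the use case and the applicable law.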

Detecting and managing drift

Model drift is the degradation of a model's performance over time.

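- Measurement: Monitor for concept drift (the relationship between inputs and outcomes changes, which typically shows up as a drop in accuracy) and data drift (the distribution of input data shifts away from the training data).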

- Management: Retrain the model on more recent data, triggered by alerts from automated monitoring platforms.
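
One widely used way to quantify data drift for a single numeric feature is the Population Stability Index (PSI), which compares how a baseline sample and a recent sample fall across the same bins. The sketch below is a minimal standard-library version. The equal-width binning, the `1e-6` floor for empty bins, and the `ALERT_THRESHOLD` of 0.25 are assumptions for illustration, and the check says nothing about concept drift, which requires labeled outcomes.

```python
import math


def psi(baseline, recent, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(baseline), max(baseline)
    width = ((hi - lo) / bins) or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    b, r = shares(baseline), shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


# Toy data: a uniform baseline and a recent sample shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

ALERT_THRESHOLD = 0.25  # assumed cut-off; tune per feature and use case

for name, sample in [("unchanged", baseline), ("shifted", shifted)]:
    value = psi(baseline, sample)
    status = "ALERT: consider retraining" if value > ALERT_THRESHOLD else "ok"
    print(f"{name}: PSI={value:.3f} -> {status}")
```

A monitoring platform would run a check like this on a schedule for each important feature and route alerts into the retraining workflow described above.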

Assessing and hardening security

AI models are software with unique vulnerabilities.

- Assessment: Test for threats like adversarial attacks, data poisoning, and model extraction.

- Hardening: Improve security through adversarial training and privacy-enhancing technologies (PETs).

What roles and responsibilities make up an effective AI-governance committee?

An AI governance committee is a cross-functional team that provides central oversight for an organization's AI strategy and execution. An effective committee includes diverse expertise to ensure decisions are balanced and well-informed.

- Executive sponsor: Champions the program, secures budget, and ensures alignment with business strategy.

- AI governance lead / AI ethics officer: Chairs the committee, manages daily operations, and serves as the central point of contact for all AI governance matters.

- Legal & compliance counsel: Monitors the regulatory landscape, advises on legal risks, and reviews AI systems for compliance.

- Lead data scientist / ML engineering lead: Provides technical expertise, advises on practical implementation, and helps translate policy into developer guidelines.

- IT & cybersecurity lead: Assesses and manages the security risks of AI systems, ensures infrastructure is secure, and oversees defenses against cyber threats.

- Business unit / product representative: Represents the business units deploying AI, providing essential context on the use case, its goals, and its real-world impact.

Which tools and platforms support operational AI governance?

As AI use scales, manual governance becomes impossible. A dedicated technology stack, including a range of AI governance tools, is essential to operationalize policy and automate compliance.

AI governance & risk management platforms

These are the central hubs for an AI governance program. They provide an automated model inventory, risk assessment workflows, and reporting dashboards. Many are focused on delivering AI governance automation to replace manual, error-prone spreadsheets and documents with integrated, efficient systems.
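
To show what a model inventory holds at its simplest, here is a hedged in-memory sketch in Python. The `ModelRecord` fields, the risk tier labels, the 180-day review interval, and the example models are all assumptions for illustration; a commercial platform would add persistence, access control, and an audit trail.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ModelRecord:
    """One row in a hypothetical AI model inventory."""
    model_id: str
    owner: str
    purpose: str
    risk_tier: str       # assumed labels, e.g. "high", "limited", "minimal"
    last_reviewed: date


class ModelInventory:
    """In-memory registry acting as a single source of truth for models."""

    def __init__(self) -> None:
        self._records: dict[str, ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        if record.model_id in self._records:
            raise ValueError(f"{record.model_id} is already registered")
        self._records[record.model_id] = record

    def by_risk_tier(self, tier: str) -> list[str]:
        """IDs of all models assigned to the given risk tier."""
        return sorted(
            r.model_id for r in self._records.values() if r.risk_tier == tier
        )

    def overdue_reviews(self, today: date, max_age_days: int = 180) -> list[str]:
        """IDs of models whose last review is older than max_age_days."""
        return sorted(
            r.model_id
            for r in self._records.values()
            if (today - r.last_reviewed).days > max_age_days
        )


inventory = ModelInventory()
inventory.register(
    ModelRecord("credit-scoring-v3", "risk-team", "Loan screening", "high", date(2024, 10, 1))
)
inventory.register(
    ModelRecord("support-chatbot", "cx-team", "Customer support", "limited", date(2025, 4, 15))
)

print(inventory.by_risk_tier("high"))
print(inventory.overdue_reviews(today=date(2025, 6, 1)))
```

Even a structure this small answers the two questions auditors tend to ask first: which models are high risk, and which have not been reviewed recently.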

Model observability & monitoring tools

These tools focus on the post-deployment phase, continuously monitoring live models for performance degradation, drift, and bias.

MLOps platforms

These platforms manage the end-to-end machine learning lifecycle. Many are now integrating governance features like model versioning and approval workflows.

Privacy-enhancing technologies (PETs)

This category of technologies enables data analysis and model training while protecting data privacy, helping resolve the conflict between data-hungry models and privacy obligations.

Case studies & lessons

Theory is useful, but seeing how AI governance works in the real world provides the most valuable insights. These condensed case studies illustrate common failure points and the power of a structured governance response.

Case study 1: the biased loan application model

- The challenge: A financial services firm deployed an AI model to automate loan application screening. An internal audit revealed the model was disproportionately rejecting qualified applicants from minority neighborhoods, using proxy variables like spending habits as a stand-in for protected attributes.

- The governance solution: The AI governance committee immediately paused the model. A formal audit was conducted, bias mitigation techniques were applied to the training data, and a continuous monitoring system was implemented to track fairness metrics in real time.

- The key lesson: Bias is often unintentional and hidden in proxy variables. Proactive auditing and real-time AI monitoring are non-negotiable.

Case study 2: the insecure healthcare diagnostic tool

- The challenge: A hospital wanted to use a third-party AI tool to help diagnose diseases from medical images. The legal team raised concerns about sending sensitive Protected Health Information (PHI) to a vendor's cloud server.

- The governance solution: The committee's vendor risk policy mandated a solution that could be deployed on-premises. The hospital used privacy-enhancing technologies (PETs) so the model could be improved using its data without the raw data ever leaving its secure environment.

- The key lesson: Robust AI data management and governance policies enable the safe adoption of innovative technologies; they don't have to be a barrier.

Key takeaways from real-world cases

These scenarios reveal a few universal truths about AI governance:

- Proactive is better than reactive: The most expensive failures happen when governance is an afterthought.

- Data is the biggest risk: The quality, representativeness, and security of your training data are the most common points of failure.

- Human oversight is irreplaceable: Even the most advanced AI requires a formal process for human intervention and final decision-making, especially in high-risk contexts.

These examples are just the beginning. To see more in-depth scenarios covering different industries and risk types, explore our complete library of AI governance examples.

Next steps

The age of unmanaged, experimental AI is over. As we move further into 2025, the convergence of powerful new technologies, rising stakeholder expectations, and binding regulations has made AI governance an essential pillar of modern business strategy. It is the critical enabler for any organization that wants to innovate with artificial intelligence responsibly, sustainably, and competitively.

Implementing AI governance is not a simple, one-off task. It is a continuous journey that requires commitment from leadership, collaboration across departments, and the right combination of people, processes, and technology. By starting now to build your inventory, establish your committee, and adopt a risk-based framework like the NIST AI RMF, you can build the muscle memory for responsible AI.

The path forward involves moving from reactive, ad-hoc measures to a proactive, systematic program that embeds ethics and control into every stage of the AI lifecycle. The frameworks, tools, and strategies outlined in this guide provide a comprehensive blueprint for that journey. The time to build your AI governance program is now.