What if your AI's impressive accuracy hides a regulatory landmine?

Artificial intelligence is transforming industries, driving innovation at an unprecedented pace. We celebrate models with higher accuracy, better prediction rates, and faster processing. But beneath the surface of these engineering triumphs lurks a growing tension: the gap between showing that a model works technically and explaining why it makes specific decisions in terms that satisfy regulators, customers, and the public.

This is where AI Explainability enters the picture. It isn’t optional; it’s the essential bridge between rapid innovation and regulatory accountability. Relyance AI’s Definitive AI Governance guide provides the strategic blueprint you need to navigate the new era of AI with confidence.

Things You’ll Learn:

- Why technical AI metrics often fail compliance scrutiny.

- How data transformations create hidden explainability gaps.

- The power of automated context for true AI understanding.

- How to achieve explainability without slowing innovation.

Engineering metrics vs. compliance needs

Data science and engineering teams thrive on quantifiable metrics. Accuracy, precision, recall, F1 scores – these tell us how well a model performs its task. They are crucial for iteration and improvement.

However, when regulators, auditors, or even concerned customers ask questions, they aren't interested in the F1 score. They want to know:

- Why was this individual denied a loan?

- On what basis was this candidate flagged?

- Is the model biased against a protected group?

- Can you prove the data used was appropriate and handled according to regulations like GDPR or CCPA?

These questions demand transparency and justification, falling squarely under the umbrella of AI Explainability. Relying solely on performance metrics leaves organizations dangerously exposed. A model can be highly accurate overall yet systematically biased in ways that violate fairness standards or data privacy regulations.

The technical validation simply doesn't translate to the language of risk management and legal compliance. This disconnect isn't just theoretical; it represents significant financial and reputational risk.
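To make the point about aggregate metrics concrete, here is a minimal Python sketch that reports accuracy and approval rate per group alongside the overall number. The data, the group labels, and the `group_report` helper are all invented for illustration; which fairness measure is appropriate depends on the regulation and use case in question.

```python
from collections import defaultdict

def group_report(y_true, y_pred, groups):
    """Return overall accuracy plus per-group accuracy and approval rate."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "approved": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        s["approved"] += int(pred == 1)
    overall = sum(s["correct"] for s in stats.values()) / len(y_true)
    per_group = {
        g: {
            "accuracy": s["correct"] / s["n"],
            "approval_rate": s["approved"] / s["n"],
        }
        for g, s in stats.items()
    }
    return overall, per_group

# Hypothetical toy data: 1 = loan approved, 0 = loan denied.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "A", "B", "B", "B", "B"]

overall, per_group = group_report(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.2f}")
for name, metrics in sorted(per_group.items()):
    print(name, metrics)
```

In this toy data the overall accuracy looks respectable, yet no applicant in group B is approved, including the two who should have been. The headline metric alone would never surface that.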

The blind spot of derived data and feature engineering

Compounding this challenge is the complexity hidden within AI pipelines. Raw data rarely feeds directly into a model. Instead, it undergoes significant transformation through feature engineering – creating new input variables from existing ones, combining fields, normalizing values, or deriving insights. This process is vital for improving model performance.

However, many widely used AI Explainability techniques (like LIME or SHAP) explain the model's behavior in terms of these final, engineered features. While useful, this approach can create a critical blind spot. It might tell you which engineered feature influenced a decision, but on its own it does not trace that influence back through the transformations to the original raw data source.

Why is this a problem? Because compliance demands often relate to the raw data itself – its provenance, its sensitivity, and the permissions associated with its use. If you can only explain the model based on derived features, you've lost the crucial context needed to demonstrate, for example, that sensitive personal data wasn't inappropriately used to influence an outcome.

True AI Explainability requires understanding the entire data lineage, not just the final step before the model's prediction.
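One way to picture closing this blind spot is to pair feature-level attributions with a lineage map and roll the scores back to raw fields. The sketch below is illustrative only: the feature names, the lineage map, the sensitivity labels, and the attribution values are all hypothetical, and splitting a feature's attribution evenly across its sources is a deliberate simplification, not a property of SHAP or LIME.

```python
from collections import defaultdict

# Hypothetical lineage map: engineered feature -> raw fields it was derived from.
FEATURE_LINEAGE = {
    "debt_to_income": ["monthly_debt", "annual_income"],
    "region_risk_score": ["postal_code"],
    "account_age_days": ["account_opened_at"],
}

# Hypothetical classification of raw fields considered sensitive.
SENSITIVE_RAW_FIELDS = {"postal_code"}

def attribute_to_raw(feature_attributions, lineage):
    """Spread each feature's absolute attribution evenly over its raw sources."""
    raw_scores = defaultdict(float)
    for feature, score in feature_attributions.items():
        sources = lineage[feature]
        for source in sources:
            raw_scores[source] += abs(score) / len(sources)
    return dict(raw_scores)

# Invented attribution values, shaped like the per-feature output of an
# explainer run on a single decision.
attributions = {
    "debt_to_income": 0.40,
    "region_risk_score": -0.30,
    "account_age_days": 0.10,
}

raw_influence = attribute_to_raw(attributions, FEATURE_LINEAGE)
flagged = sorted(f for f in raw_influence if f in SENSITIVE_RAW_FIELDS)

print(raw_influence)
print("sensitive raw fields influencing this decision:", flagged)
```

The explainer alone would report that `region_risk_score` mattered. Only the lineage map reveals that this score is derived from `postal_code`, which is the kind of fact a compliance review is likely to ask about.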

Automating explainability: build context without slowing down

Does achieving genuine AI Explainability mean grinding innovation to a halt with manual documentation and painstaking reviews? Not necessarily. The key lies in building context automatically and continuously.

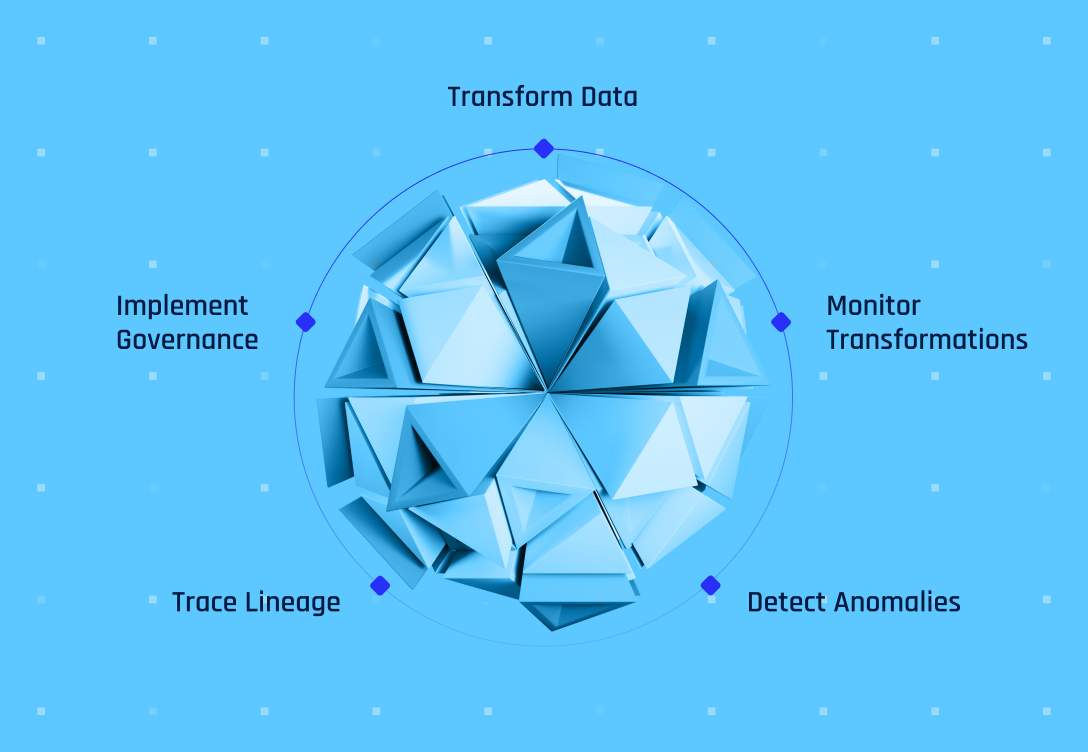

Imagine having a dynamic, real-time map of how data flows through your systems – from its initial ingestion, through every transformation and enrichment step, into the AI model, and influencing its output. By continuously monitoring these data flows, you gain the essential context needed to understand:

- Data provenance: Where did the data influencing a specific decision originate?

- Transformations: What specific steps were taken to turn raw data into model inputs?

- Sensitivity: Was personal or sensitive data involved at any stage, and was its use appropriate?

This automated, contextual understanding doesn't just support post-hoc explanations; it enables proactive governance. It allows teams to identify potential compliance risks during development and operation, embedding AI Explainability into the process rather than treating it as an afterthought.

This approach bridges the gap, allowing innovation to flourish within guardrails built on automated transparency and understanding.
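As a rough illustration of building context automatically, the Python sketch below logs every transformation as it runs, so provenance, transformation steps, and sensitivity can be queried later without manual documentation. The `LineageLog` class, the field names, and the sensitivity labels are hypothetical, and the sketch assumes each derived field gets a new name. It is not a description of how Relyance AI or any other product works.

```python
from dataclasses import dataclass, field

@dataclass
class LineageLog:
    """Records which inputs produced each derived field, and by which step."""
    sensitive: set
    steps: list = field(default_factory=list)
    parents: dict = field(default_factory=dict)

    def derive(self, record, output, inputs, fn, step_name):
        """Apply fn to the named inputs, store the result, and log the step."""
        record[output] = fn(*(record[name] for name in inputs))
        self.parents[output] = list(inputs)
        self.steps.append(
            {"step": step_name, "inputs": list(inputs), "output": output}
        )
        return record

    def raw_sources(self, name):
        """Walk the lineage back to the raw fields behind a given field."""
        if name not in self.parents:
            return {name}
        sources = set()
        for parent in self.parents[name]:
            sources |= self.raw_sources(parent)
        return sources

    def sensitive_inputs(self, model_features):
        """Map each model feature to the sensitive raw fields it depends on."""
        hits = {}
        for feature in model_features:
            found = self.raw_sources(feature) & self.sensitive
            if found:
                hits[feature] = sorted(found)
        return hits

# Hypothetical raw record and sensitivity labels.
log = LineageLog(sensitive={"birth_year"})
record = {"birth_year": 1990, "annual_income": 60000, "monthly_debt": 1500}

log.derive(record, "age", ["birth_year"], lambda y: 2024 - y, "compute_age")
log.derive(record, "monthly_income", ["annual_income"], lambda a: a / 12, "annual_to_monthly")
log.derive(record, "debt_to_income", ["monthly_debt", "monthly_income"], lambda d, i: d / i, "debt_ratio")
log.derive(record, "age_band", ["age"], lambda a: a // 10, "bucket_age")

hits = log.sensitive_inputs(["debt_to_income", "age_band"])
print(hits)
```

Because the log is written as a side effect of running the pipeline, a check like `sensitive_inputs` could run during development or in CI, flagging a model feature that depends on sensitive data before the model ships rather than after an audit request arrives.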

Automation-driven explainability works best when aligned with broader governance processes. See how they fit together in our AI Governance Guide.

Bridging the gap with foundational understanding

Achieving this level of continuous, contextual understanding is where platforms like Relyance AI become critical. True AI Explainability relies heavily on knowing exactly what data is being used, where it came from, and how it was transformed along the way.

Relyance AI provides this foundational layer by automatically discovering, classifying, and mapping data assets and flows across your entire ecosystem in real time. It translates the complex journey of data through code, infrastructure, applications, and vendors into a clear picture.

This automated visibility into the operational reality of data processing provides the essential context needed to connect model behavior back to raw data origins and transformations, a cornerstone for robust AI Explainability and demonstrating compliance.

Trust is built on transparency

AI Explainability is more than just a technical challenge; it's the missing link ensuring that the incredible power of AI is wielded responsibly and ethically.

By moving beyond simple performance metrics, acknowledging the complexities of feature engineering, and leveraging automation to build continuous context, organizations can bridge the divide between rapid innovation and stringent compliance.

It's about building trust – trust with regulators, trust with customers, and ultimately, trust in the transformative potential of AI itself. The future belongs to those who can not only innovate but also explain.