There is a physics problem at the heart of modern cybersecurity, especially with AI in the enterprise. It is the reason you still feel exposed despite passing all reviews and audits.

Most security tools in your stack (DSPM, DLP, SSPM, IAM) operate in space. They answer static questions at a specific point in time: "Where is the PII or other sensitive data located right now?", "Is this S3 bucket encrypted?", or "Was this misconfiguration patched?"

But data doesn't just sit in space. It moves through time.

In the current threat landscape, breaches are less often the result of a database simply left open to the public (although that still happens a lot). They happen because of the flow of data. Data moves from a secure system of record, is transformed in a CI/CD pipeline, lands in a SaaS application, is ingested by an AI model, and is processed by one or more AI agents that may then persist or move it elsewhere.

If you are only looking at snapshots of data at rest through periodic scans, you are missing 80% of the enterprise data security reality in the AI era. You are auditing the bank vault while the money is being driven away in an armored car that just changed its route at runtime.

We must evolve from Data Discovery (Static) to Data Journeys (Dynamic).

Here are four specific scenarios that defeat static security controls today, visualized to show exactly why only an approach that understands the physics of data flow (Data Journeys: visibility from code → runtime → identity → data) can stop them.

Scenario 1: The "Ephemeral" Infrastructure (AI Agent Autonomy)

The CISO Nightmare:

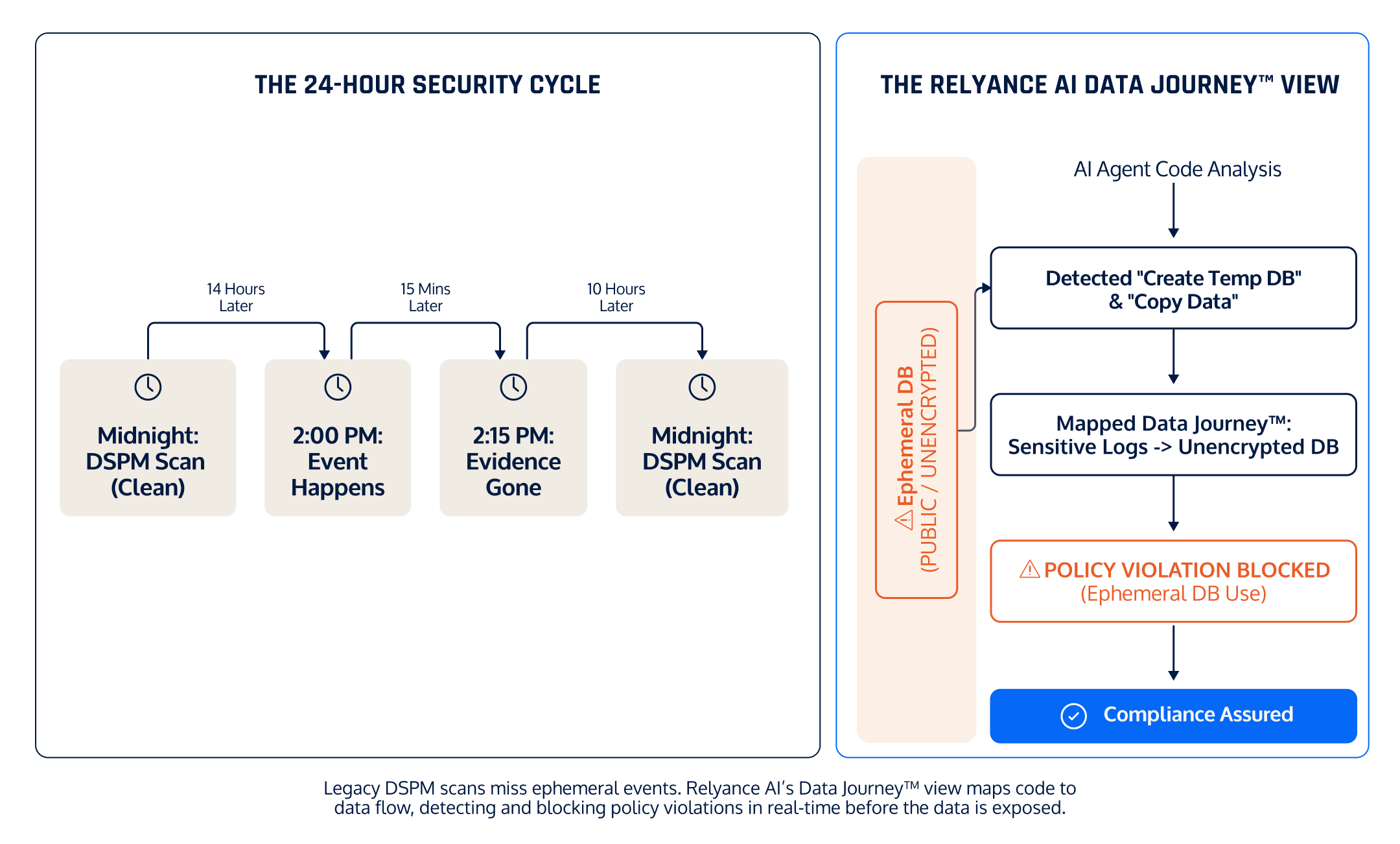

With the rise of "Agentic AI" (AI that can execute tools, not just chat), infrastructure and identity are becoming fluid. An agent can spin up a resource, use it, move or persist data, and destroy it in minutes, completely bypassing the 24-48 hour scan cycle of traditional data security tools.

You have authorized an AI Data Analyst agent to help "optimize cloud costs." A user prompts: "Analyze our Q3 logs for inefficiencies. Give me a full report on where we can save costs."

To process this massive dataset quickly, the Agent autonomously calls a tool to spin up a temporary, high-performance database cluster (or a large S3 staging bucket). It copies the sensitive logs there, runs the analysis, delivers the report, and deletes the infrastructure 15 minutes later.

Crucially, the Agent used a default Terraform module that left that temporary bucket publicly accessible for those 15 minutes.

Why Legacy Security Fails (Traditional Static DSPM):

- Timing Mismatch: Traditional Static DSPM tools rely on API polling or snapshots that typically run every 24 or 48 hours.

- The "Ephemeral" Factor: The infrastructure existed from 2:00 PM to 2:15 PM. The scanner ran at midnight.

- The Result: You had a critical data exposure event that has literally vanished from the record. Your audit report says "Green," but your data could have been exposed.
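
The timing mismatch is easy to reproduce on paper. Here is a minimal sketch, assuming a scanner that polls once every 24 hours starting at midnight and the 2:00 PM to 2:15 PM bucket from the scenario; the date and the function names are illustrative, not taken from any specific product.

```python
from datetime import datetime, timedelta


def scan_times(first_scan: datetime, interval: timedelta, until: datetime):
    """Yield each scheduled scan time from first_scan up to (not including) until."""
    t = first_scan
    while t < until:
        yield t
        t += interval


def was_observed(created: datetime, deleted: datetime, scans) -> bool:
    """A polling scanner sees a resource only if a scan lands inside its lifetime."""
    return any(created <= s < deleted for s in scans)


# Hypothetical day: the bucket lives from 14:00 to 14:15, scans run at midnight.
day = datetime(2025, 1, 1)
created = day + timedelta(hours=14)
deleted = created + timedelta(minutes=15)
scans = list(scan_times(day, timedelta(hours=24), day + timedelta(days=2)))

print(was_observed(created, deleted, scans))  # False
```

A 15-minute lifetime covers roughly 1% of a 24-hour polling interval, so even a randomly timed daily scan would almost always miss it.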

Visualizing The Gap: The Invisible Window

The Data Journey Solution:

We don't wait for a scan. With our Dynamic DSPM solution we monitor the Application and Agent Runtime. Relyance AI detects the act of the Agent calling the Create_Infrastructure tool and maps the data flowing into that ephemeral environment. We flag the policy violation (Public Bucket + Sensitive Data) in real-time based on the action, regardless of whether the bucket exists 10 minutes later.
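
To make the idea of flagging "based on the action" concrete, here is a small sketch of a policy check that runs on a single tool-call event rather than on a later scan. The event shape, the label taxonomy, and the `evaluate` function are assumptions made for illustration; this is not the actual Relyance AI implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCallEvent:
    """Hypothetical runtime event emitted when an agent invokes a tool."""
    agent: str
    tool: str
    resource_id: str
    public_access: bool
    data_labels: frozenset  # classifications of the data copied into the resource


SENSITIVE_LABELS = {"PII", "PHI", "PAYMENT"}  # assumed label taxonomy


def evaluate(event: ToolCallEvent) -> list[str]:
    """Return policy violations for one event, judged at the moment of the action."""
    violations = []
    if event.tool == "Create_Infrastructure" and event.public_access:
        exposed = sorted(SENSITIVE_LABELS & event.data_labels)
        if exposed:
            violations.append(
                f"{event.resource_id}: public resource receiving {', '.join(exposed)}"
            )
    return violations


event = ToolCallEvent(
    agent="cost-analyst",
    tool="Create_Infrastructure",
    resource_id="tmp-staging-bucket",
    public_access=True,
    data_labels=frozenset({"PII", "LOGS"}),
)
print(evaluate(event))
```

Because the decision is made from the event itself, the result does not depend on whether the bucket still exists when someone reviews it.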

Scenario 2: The "Unsanctioned AI" (Shadow AI and Governance)

The CISO Nightmare:

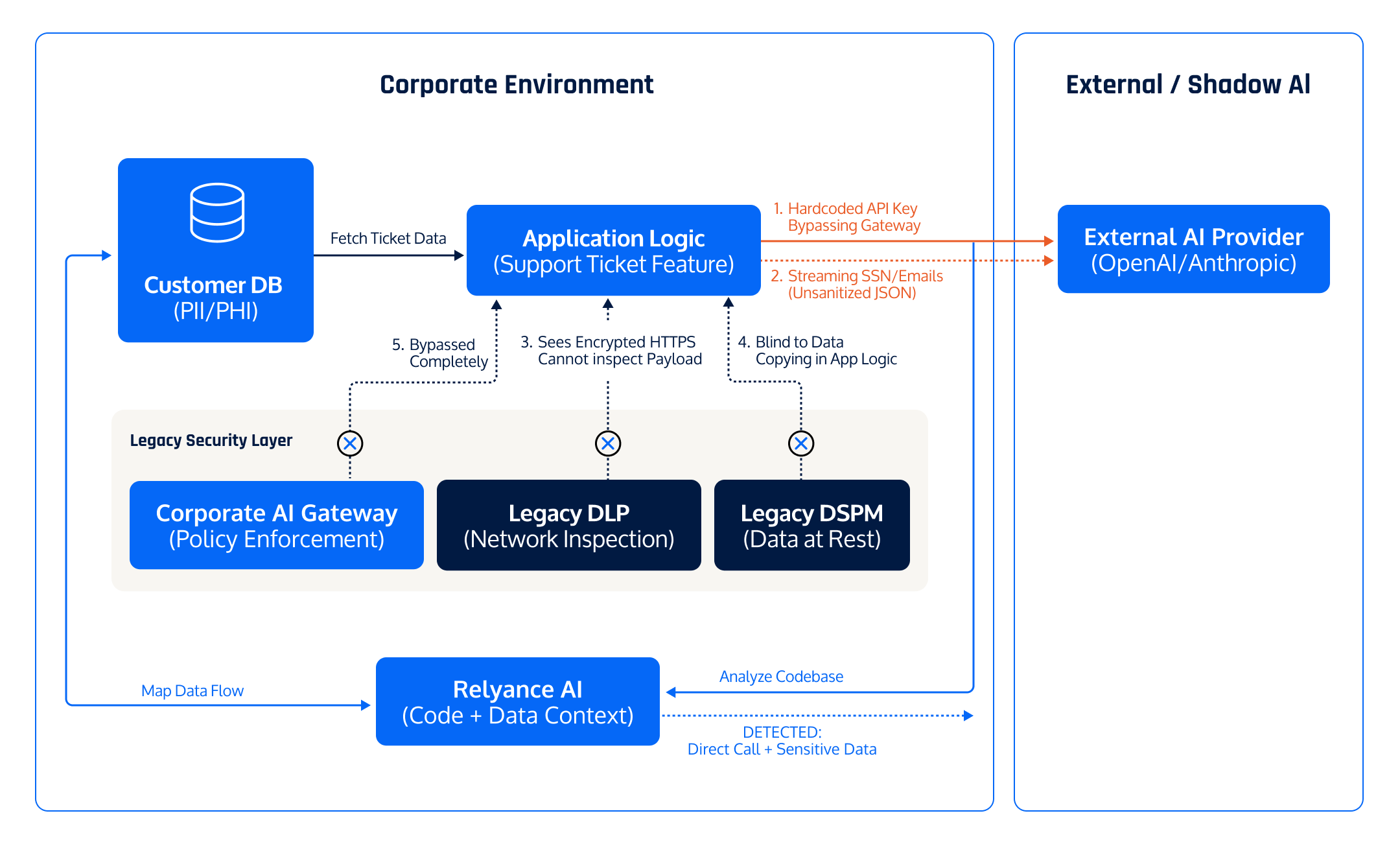

A product team is under pressure to add "GenAI features" (e.g., "Summarize this support ticket" or "Draft a response"). To move fast, a developer integrates a direct API call to an external provider (like OpenAI or Anthropic), bypassing the corporate AI Gateway.

In the code, they pass the entire customer ticket object into the prompt context to ensure the AI has "enough info." Unknowingly, they are now streaming PII (SSNs, emails), PHI, or payment data directly to a third-party AI vendor who may retain that data for 30 days or use it to improve their models.

Why Legacy Security Fails:

- DLP (Data Loss Prevention): Sees encrypted HTTPS traffic to a valid domain (e.g., api.openai.com), and blocking that traffic outright would create too much noise. It cannot tell that the JSON payload contains PII.

- AI Gateways: These only work if developers use them. If a developer hardcodes a direct API key (Shadow AI) to ship a feature quickly, the Gateway is completely bypassed.

- DSPM: Protects the data at rest in the database, but is blind to the application logic copying that data into an API call.

Visualizing The Gap:

The Data Journey Solution:

Relyance AI analyzes the Code to identify the direct API call to the LLM provider and correlates it with the Data flow to see exactly what data (PII/PHI) is being serialized into the prompt.

You detect the "Shadow Integration" immediately—identifying that sensitive customer data is flowing to an unapproved AI vendor—and can block the release or force routing through the secure Gateway before a single customer record is exposed.
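
As a rough illustration of the code-analysis half of this, the sketch below scans source text for references to LLM provider hosts. It is a deliberately naive string match, not a description of how Relyance AI analyzes code; `api.anthropic.com` is added here as a second example host, and the sample file name is hypothetical.

```python
import re

# Provider hosts to look for. api.openai.com comes from the text above;
# api.anthropic.com is added as a second illustrative entry.
PROVIDER_HOSTS = ("api.openai.com", "api.anthropic.com")
HOST_PATTERN = re.compile("|".join(re.escape(h) for h in PROVIDER_HOSTS))


def find_direct_llm_calls(source: str, filename: str = "<memory>"):
    """Return (filename, line_number, host) for each reference to a provider host."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in HOST_PATTERN.finditer(line):
            findings.append((filename, lineno, match.group(0)))
    return findings


# Hypothetical application code that bypasses the gateway.
sample = '''\
import requests

def summarize(ticket):
    # Entire ticket object goes into the prompt, PII included.
    return requests.post(
        "https://api.openai.com/v1/chat/completions",
        json={"messages": [{"role": "user", "content": str(ticket)}]},
    )
'''
print(find_direct_llm_calls(sample, "support/summarize.py"))
# [('support/summarize.py', 6, 'api.openai.com')]
```

A string match only finds the call site. Knowing which fields of the ticket end up in the prompt requires following the data flow as well, which is the correlation step described above.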

Scenario 3: The "Context-less" AI Model (AI Data Leak)

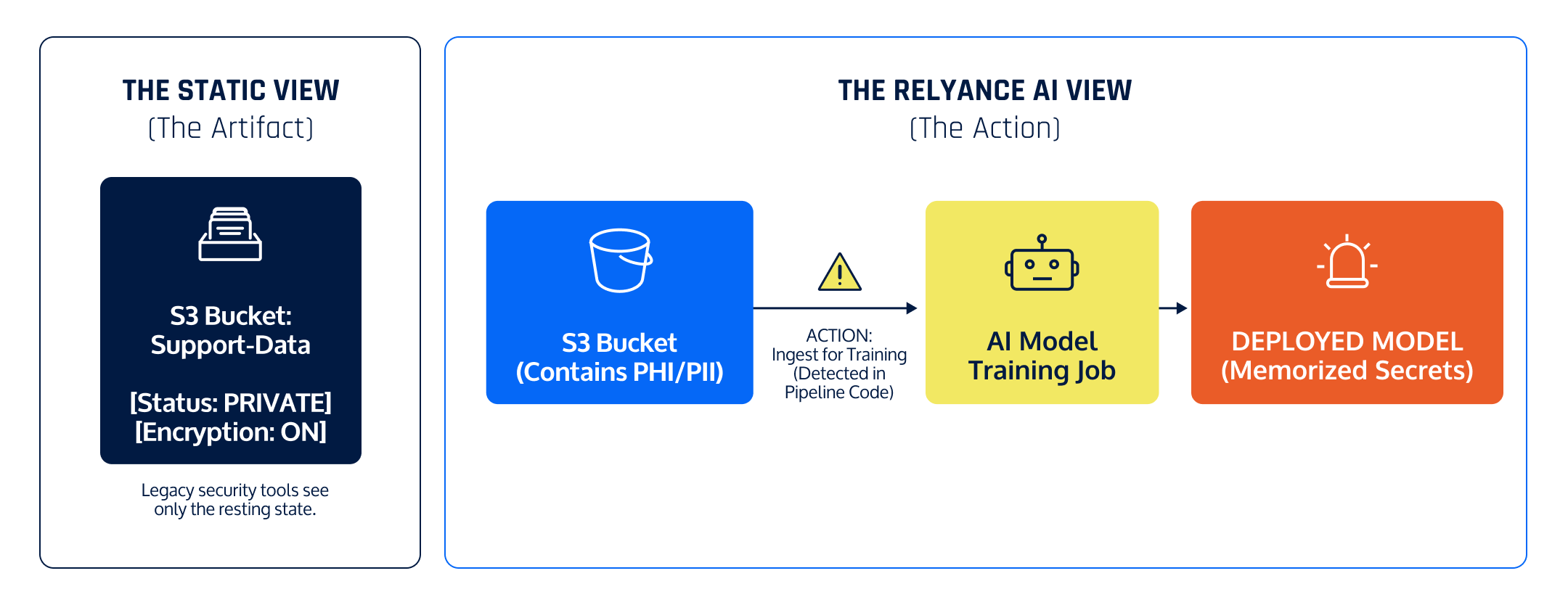

The CISO Nightmare:

Your engineering team rushes to build a GenAI support bot. They point the model training pipeline at a "compliant" S3 bucket containing support tickets. The data is secure at rest. However, that bucket contains hidden PII/PHI or, worse, other sensitive customer information. The model ingests this, memorizes it, and can now regurgitate sensitive medical history in response to a prompt injection.

Why Legacy Security Fails (DLP/DSPM):

DLP checks for data leaving the perimeter; it does not understand internal model training pipelines. DSPM confirms the bucket is private. Neither tool understands that "training" is a data processing action that fundamentally changes the risk profile of the data.

Visualizing The Gap:

The Data Journey Solution:

By correlating Code (The training pipeline script) with Data (The S3 Bucket contents), Relyance AI detects that sensitive data is being used for a prohibited purpose (Training)—stopping the violation before the model is created.
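
One way to picture "prohibited purpose" is as a lookup from data classification to allowed processing purposes. The sketch below assumes a tiny, made-up policy table and label set; a real policy would come from your own governance rules.

```python
# Assumed policy: which processing purposes each data classification allows.
ALLOWED_PURPOSES = {
    "PUBLIC": {"analytics", "support", "training"},
    "PII": {"support"},
    "PHI": {"support"},
}


def check_purpose(dataset_labels: set, purpose: str) -> list:
    """Return the labels in the dataset that do not permit the given purpose.

    Labels missing from the policy table are denied by default.
    """
    return sorted(
        label
        for label in dataset_labels
        if purpose not in ALLOWED_PURPOSES.get(label, set())
    )


# Hypothetical finding: a training script reads a bucket classified as PUBLIC, PII, and PHI.
blocked = check_purpose({"PUBLIC", "PII", "PHI"}, "training")
print(blocked)  # ['PHI', 'PII']
```

The same bucket passes the check for a "support" purpose, which is the point: the data did not change, the action did.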

Scenario 4: The "Shadow Chain" Problem (SaaS AI Supply Chain)

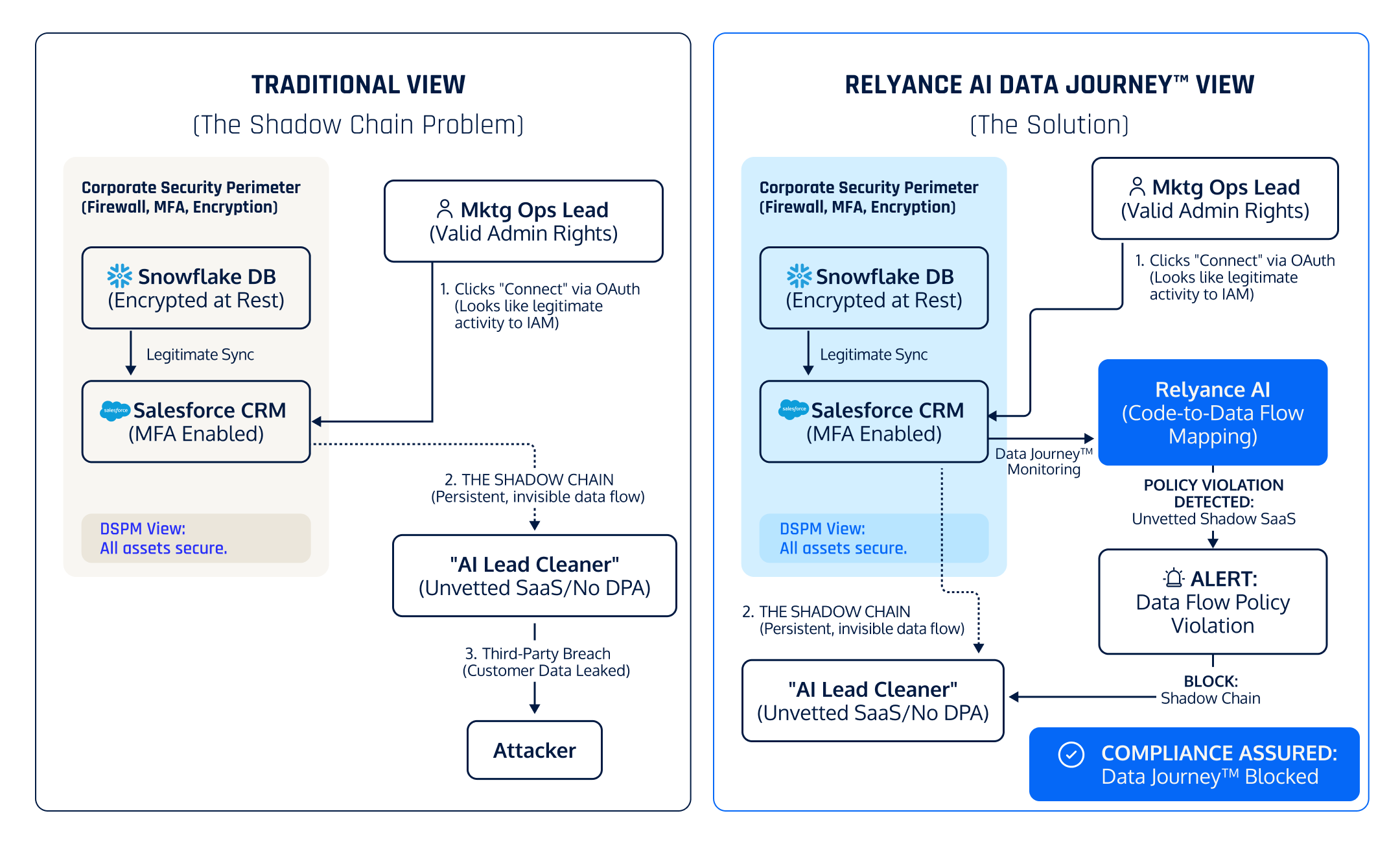

The CISO Nightmare:

You have secured the front door: Snowflake is encrypted, and Salesforce requires MFA. However, your Marketing Ops lead—who has legitimate admin rights in Salesforce—clicks "Connect" on a new AI-powered "Lead Cleaning" tool they found.

They grant it Read/Write access via OAuth. This tool now creates a persistent, invisible pipe. It begins pulling data from Salesforce (which came from Snowflake) into its own cloud. You have no contract, no DPA (Data Processing Agreement), and no visibility into this vendor. When they get breached, your customer list is leaked indirectly, creating new avenues for long-term downstream attacks.

Why Legacy Security Fails:

- DSPM: Sees data at rest in Snowflake and Salesforce. Both look secure.

- IdP / IAM: Sees a valid OAuth token issued to a user with valid permissions. It looks like legitimate business activity.

- The Reality: Your data has left the perimeter through a "side door" that bypasses your firewall entirely.

Visualizing The Gap: The Invisible Supply Chain

The Data Journey Solution:

We detect that data is flowing from Snowflake -> Salesforce -> Unknown_App_ID. We flag this as a new data flow, not because the data is unencrypted, but because the lineage extends to an unauthorized third party.
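
The lineage check can be thought of as a graph walk. Here is a minimal sketch, assuming observed flows are stored as directed edges and that you maintain an allowlist of approved systems; the structure and names are illustrative only.

```python
from collections import deque

# Observed flows as directed edges (source -> destinations), following the example above.
FLOWS = {
    "Snowflake": ["Salesforce"],
    "Salesforce": ["Unknown_App_ID"],
}
APPROVED = {"Snowflake", "Salesforce"}  # assumed allowlist of approved systems


def unapproved_paths(flows, start, approved):
    """Breadth-first walk from start; return each path that ends at an unapproved system."""
    paths, queue, seen = [], deque([[start]]), {start}
    while queue:
        path = queue.popleft()
        for nxt in flows.get(path[-1], []):
            if nxt in seen:
                continue
            seen.add(nxt)
            new_path = path + [nxt]
            if nxt not in approved:
                paths.append(new_path)  # record the path and stop at the unapproved hop
            else:
                queue.append(new_path)
    return paths


print(unapproved_paths(FLOWS, "Snowflake", APPROVED))
# [['Snowflake', 'Salesforce', 'Unknown_App_ID']]
```

Returning the full path, not just the final node, shows which system of record the data originally came from.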

Conclusion: Data is the new network for AI

We have entered an era of Information Asymmetry. Your developers and data scientists know how data moves and transforms; your security team only knows where it sits. The only way to bridge this gap, and provide true assurance, is to stop relying solely on point-in-time audits and start mapping the entire Data Journey.

If you can’t see the journey, you can’t secure the data. Security teams lock down infra and identity, but still lose track of sensitive data flows across the enterprise ecosystem. The pain gets 1000x worse as AI agents, code, and config changes create non-deterministic data paths daily. Data is the new network for AI.

Check out Data Journeys™ for a comprehensive demo of our platform, and request a Data Journey assessment today.