We need to talk about the number 84. That's the percentage of your customers who will walk away, or sharply limit what they share with you, if you can't explain how their data is being used in your AI systems.

Let me say that again: 84% will take immediate action against opacity. And 57% won't just pull back. They'll stop using your product entirely.

No speculation. No guesswork. In December 2025, we got a direct answer from 1,017 Americans when we asked them point-blank: "If a company said they don't know exactly where your data goes inside their AI systems, what would you do?"

The results should terrify every CEO, CMO, and product leader reading this.

The abandonment cascade is already happening

Here's what keeps me up at night: This behavioral shift isn't waiting for some future trigger. It's already underway.

51% of consumers have already reduced the personal information they share with companies because of AI concerns. Not "might reduce." Not "considering reducing." They have already done so.

Think about what that means for your AI systems. Every personalization engine, recommendation algorithm, and predictive model you've spent millions building is being starved of the data it needs to work properly.

Your customers aren't complaining. They're not writing angry emails or leaving one-star reviews. They're just... sharing less. Opting out more. Clicking "decline" on permissions they used to approve without thinking.

And you probably haven't noticed yet.

The three levels of customer retaliation

When we asked people what they'd do if a company admitted they can't trace data through AI systems, the responses broke into three distinct tiers.

- Stop using the product entirely: 57%

- Continue but limit data sharing: 28%

- Continue with no changes: 7%

- Not sure: 8%

The ghosts (57%): These people are done. That's a majority of your customer base. They will stop using your product entirely. No second chances. No waiting to see if you fix it. You admitted you've lost control of their data, and they're gone.

The restrictors (28%): These people won't leave immediately, but they'll cripple your AI capabilities by limiting what they share. They'll turn off location services, decline cookies, and opt out of personalization. You keep them as customers, but you lose the data that makes your AI valuable.

The indifferent (7%): Only 7% said they'd continue with no changes. You cannot build a sustainable business on 7% of your addressable market.

Why this time is different

I've been in marketing long enough to see a dozen "privacy is dead" panic cycles. GDPR. Cambridge Analytica. Every new iOS update that makes tracking harder.

Each time, the conventional wisdom went: "Consumers say they care about privacy, but they don't actually change behavior."

That conventional wisdom just died.

What's different? AI has made data flows invisible in a way that previous technologies didn't. When Meta used your data for ads, you could kind of understand it: "I liked a page about running shoes, so now I see running shoe ads."

But AI? Your checkout data goes... somewhere. It trains... something. It influences... some model. Where did it go? Who has access? Is it being used to train systems you never agreed to power?

Consumers can't trace it. And they're assuming the worst.

The silent spiral is destroying your AI advantage

Let me walk you through how this plays out:

Stage 1: Your customers start feeling uneasy about AI. Maybe they read a news story. Maybe they see a viral tweet. The seed of suspicion is planted.

Stage 2: They start pulling back. Not dramatically. Just a little. They stop uploading photos. They use a fake email. They decline that permission.

Stage 3: Your AI gets slightly worse. Recommendations aren't quite right. Personalization feels generic. Your models drift.

Stage 4: The product experience degrades. Customers notice your AI isn't as good as competitors'. But they don't know why.

Stage 5: They leave. Not because you did anything overtly wrong. But because your AI advantage has quietly evaporated.

This is already happening. The 51% who've reduced sharing are already in Stage 2 or 3. You just haven't connected the dots yet.

The generation gap that isn't

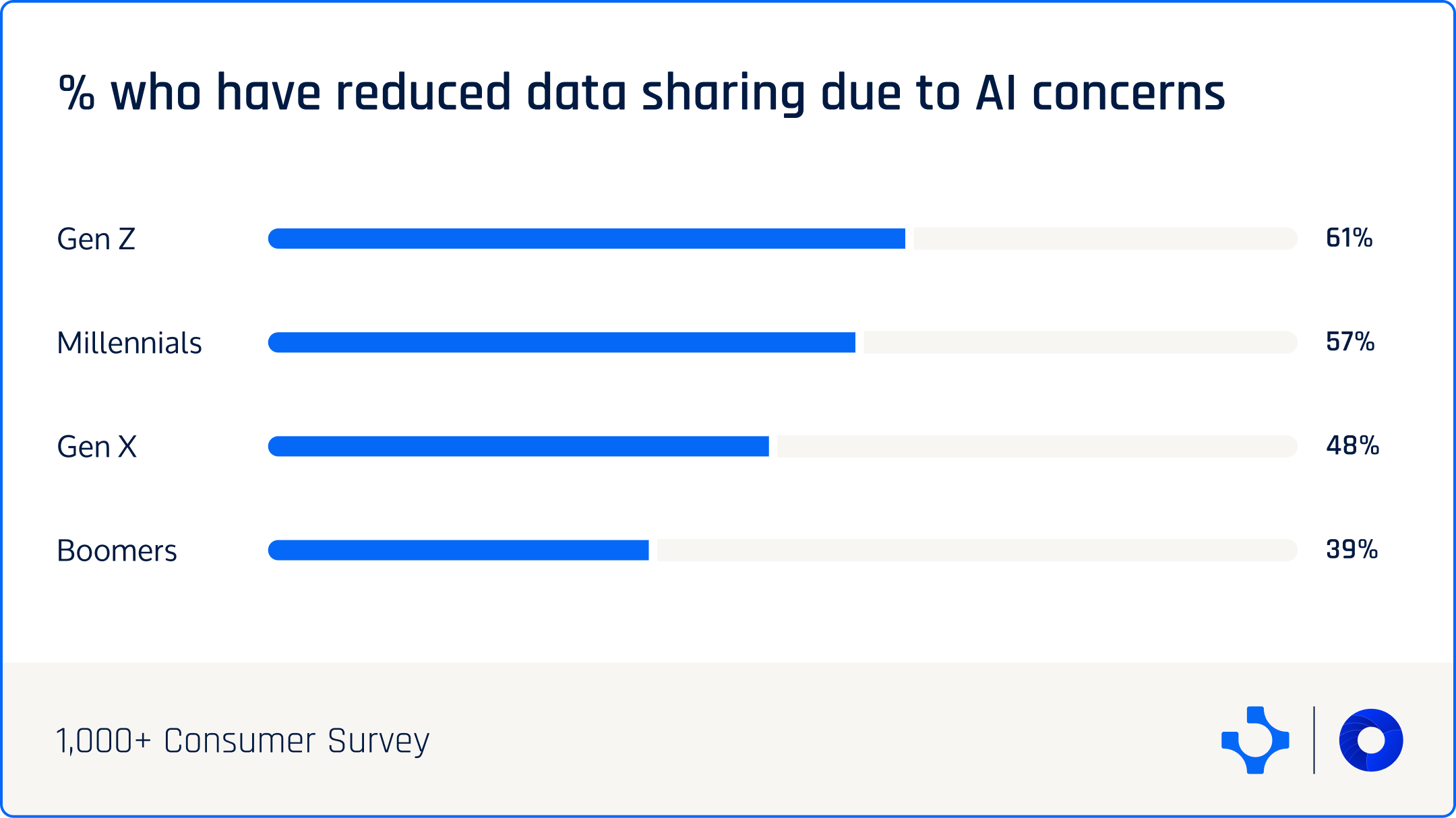

One of the most surprising findings: This isn't a young-person problem. Here's the share of each generation that has already reduced the personal information it shares:

- Gen Z: 61%

- Millennials: 57%

- Gen X: 48%

- Boomers: 39%

Yes, Gen Z is the most aggressive, but even Boomers show 39% reducing sharing: two in five members of the supposedly least tech-savvy generation.

The youngest consumers aren't leading a revolution that older consumers will ignore. They're just the leading edge of a universal shift.

And here's the kicker: The people most likely to reduce sharing are your most valuable customers.

Parents: 58% have reduced sharing. These are people with disposable income making purchase decisions for entire families.

High spenders (planning to spend $1,000+ on holiday shopping): 59% have reduced sharing. Your whales. Your highest-LTV accounts.

People whose data has been breached before: 67% have reduced sharing. And that group makes up 53% of all consumers.

The customers you most want to keep are most likely to pull back. The behavioral shift is concentrated exactly where it hurts most.

The lawsuit threat is real

As if immediate abandonment weren't enough, there's a secondary threat: 52% of consumers would consider joining legal action against companies that can't show where data went in AI systems.

Let's do the math. If you have 10 million customers:

- 5.2 million (52%) would consider joining a class action

- Even a modest settlement of $50 per person adds up to $260 million

- Plus legal fees, PR damage, regulatory scrutiny

The plaintiffs' bar is salivating. They don't need to prove you misused data. They just need to prove you can't trace it. That you lost control.

"Your Honor, the defendant admits they cannot show where customer data traveled through their AI systems."

Case closed.

The opportunity in the crisis

I've spent this entire post scaring you. Let me end with something constructive.

The behavioral shift is real. But it's not irreversible.

The 84% who would abandon opacity? They're not saying "I'll never use AI products again." They're saying "I'll abandon products that can't explain their AI." That's different.

The 51% who've already reduced sharing? They're not privacy absolutists. They're rational actors responding to uncertainty. Give them certainty—real, provable transparency—and they'll share again.

The 52% ready to sue? They're not looking for a payday. They're looking for accountability. Show them you're accountable, and the threat evaporates.

The opportunity here is as big as the threat. Because while 84% will abandon opacity, 76% will switch to competitors who can prove transparency. Even at a higher price.

The same behavioral shift destroying opaque companies is creating a massive market for transparent ones.

The question is: Which side will you be on?

Will you be the company scrambling to explain why customers are quietly leaving? Or will you be the company capturing them as they switch from competitors who couldn't prove transparency?

The 84% have spoken. The behavioral shift is here.

Your move.

—————

About the Survey: The data in this post comes from a survey of 1,017 U.S. consumers conducted by TrueDotᴬᴵ in December 2025. You can download the full report, “The AI Data Ultimatum,” here.
