Your DSPM runs at midnight. It crawls your environment. It classifies data. It generates a report. By 6 AM, you have a complete picture of your security posture.

Or do you?

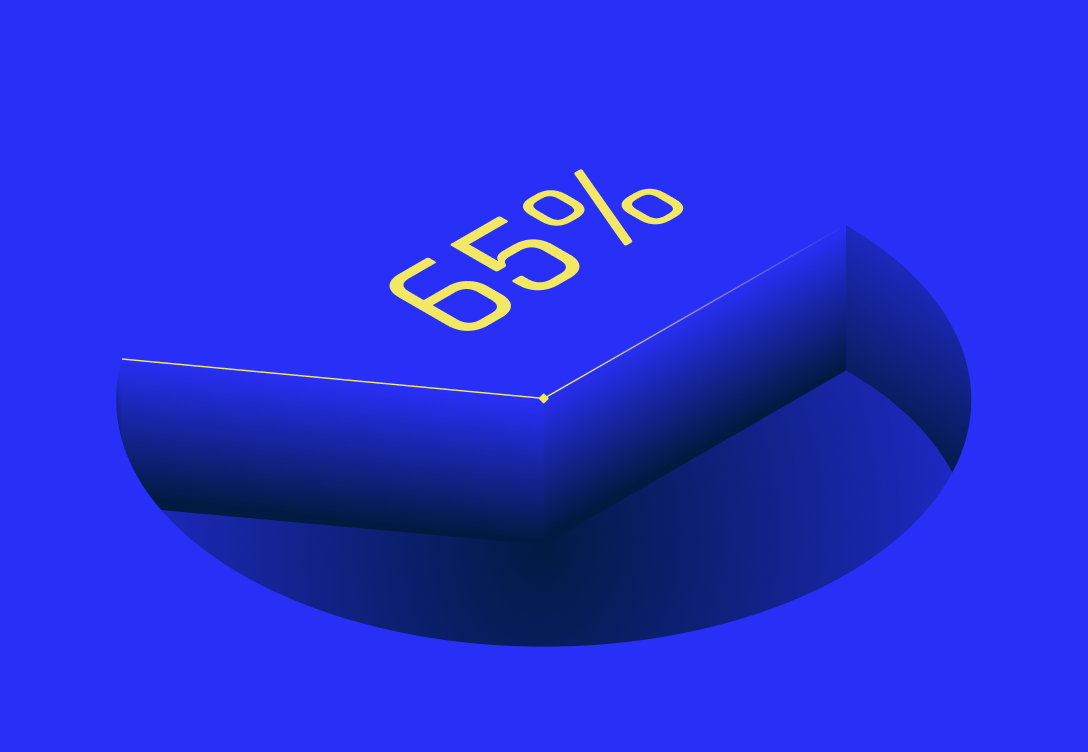

In the 24 hours between that scan and the next one, developers pushed 47 code commits. Your APIs processed 2 million requests. Your data pipelines moved 500GB. Your AI models ran 10,000 inferences.

Your scan captured none of it.

For 24 hours, you were flying blind. No visibility. No protection. Just a promise that the next scan would catch up. What if you had a 24/7 Data Defense Engineer watching every second instead?

How static scanning works

The pattern is simple: crawl, classify, report, repeat.

The scanner connects to your data stores on a schedule. It reads files and tables. It applies classification rules. It compares the results to the last scan. It flags changes. Then it goes idle until the next run.

This made sense when data lived in a few databases that changed slowly. Scan nightly, catch 95% of what matters.
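To make the crawl, classify, report, repeat loop concrete, here is a minimal sketch of one scheduled pass. Everything in it is an assumption for illustration: the `RULES` patterns, the `run_scan` function, and the `users_db` store are hypothetical, and a real scanner uses far richer detectors.

```python
import re

# Hypothetical classification rules: label -> pattern.
RULES = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def classify(text):
    """Return the set of rule labels that match the text."""
    return {label for label, pattern in RULES.items() if pattern.search(text)}

def run_scan(stores, previous):
    """One scheduled pass: crawl every store, classify it, diff against the last scan.

    `stores` maps a store name to its current contents (a stand-in for a real crawl).
    `previous` is the snapshot returned by the last run.
    """
    snapshot = {name: classify(contents) for name, contents in stores.items()}
    changes = {
        name: labels
        for name, labels in snapshot.items()
        if previous.get(name) != labels
    }
    return snapshot, changes

# Midnight run, day 1: everything is new.
snapshot, changes = run_scan({"users_db": "alice@example.com"}, previous={})

# Midnight run, day 2: nothing the scanner can see has changed.
snapshot2, changes2 = run_scan({"users_db": "alice@example.com"}, previous=snapshot)
```

The second run reports no changes, because the loop only knows about what sits in a store at the moment it looks.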

Modern architecture broke this model.

What happens between scans

Let's trace a typical 24-hour window. Your scanner runs at midnight. Here's what it misses:

Hours 1-8: Development.

Your team pushes code. Some commits change how the app handles user data. One adds logging that captures request payloads. Another integrates a new analytics service.

Your scanner is idle. Your 24/7 Data Defense Engineer analyzes every commit in real time.

Hours 9-12: Pipelines.

ETL jobs run. They pull data, transform it, load it somewhere else. Temporary tables with unmasked PII exist for 2 hours. By the time the scanner looks, they're gone.

Your scanner misses it entirely. Your Data Defense Engineer tracks every transformation, every temporary artifact.
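Here is a small sketch of why a short-lived artifact slips past a schedule. The hours are illustrative assumptions (a temp table created at hour 9 and dropped at hour 11), and `seen_by_scan` and the 30-minute export are hypothetical.

```python
def seen_by_scan(created_hour, dropped_hour, scan_hours):
    """True if any scheduled scan falls inside the artifact's lifetime."""
    return any(created_hour <= scan < dropped_hour for scan in scan_hours)

# Scan times, in hours since the first midnight run.
nightly_scans = [0, 24]
hourly_scans = range(0, 25)

# Hypothetical artifacts: (created_hour, dropped_hour).
etl_temp_table = (9, 11)            # lives for 2 hours
short_lived_export = (9.25, 9.75)   # lives for 30 minutes

missed_by_nightly = not seen_by_scan(*etl_temp_table, nightly_scans)
caught_by_hourly = seen_by_scan(*etl_temp_table, hourly_scans)
short_lived_missed = not seen_by_scan(*short_lived_export, hourly_scans)
```

Under these assumptions, the nightly schedule never sees the 2-hour table. An hourly schedule does, but it still misses anything that lives for less than an hour.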

Hours 13-18: AI training.

Your ML team launches a job. It pulls data from your warehouse plus external APIs.

Your scanner sees the warehouse. Not the external data flowing in. Your Data Defense Engineer maps the complete training data lineage.

Hours 19-24: Production.

Your app serves users. APIs process requests. Microservices pass data around. Logs capture details.

Your scanner sees none of this. Your Data Defense Engineer watches every data flow, every API call, every log entry.

At hour 24, the scanner runs. It finds the same databases it found yesterday. Your Data Defense Engineer found 47 new data flows, 3 potential policy violations, and 1 shadow AI integration. It already handled them.

How a 24/7 Data Defense Engineer works

A Data Defense Engineer doesn't crawl on a schedule. It observes continuously.

Code layer: Analyzes your codebase for data handling patterns. What gets logged? What goes to third parties? Runs on every commit, day or night.

Runtime layer: Watches actual data flows in production. Which APIs receive sensitive data? Where do requests go? Runs constantly, 24/7/365.

Pipeline layer: Tracks ETL jobs and transformations. What moved where? What temporary artifacts got created? Runs with every pipeline execution.

AI layer: Monitors training data sources, model inputs, and model outputs. What did models learn from? What do they reveal? Runs as models train and serve.

The result is complete Data Journeys, updated in real time. No gaps. No blind spots. No waiting until the next scheduled scan.
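As a rough illustration of the code layer, the sketch below checks the added lines of a commit diff for logging calls that appear to capture request payloads. It is a toy heuristic under stated assumptions, not how any particular product works: the `RISKY_LOG` pattern, the `flag_added_lines` function, and the sample diff are all hypothetical.

```python
import re

# Hypothetical heuristic: a logging call whose arguments mention a request payload.
RISKY_LOG = re.compile(
    r"\b(?:log(?:ger|ging)?)\.(?:debug|info|warning|error)\("
    r".*\b(?:request\.(?:body|json|data)|payload)\b"
)

def flag_added_lines(diff_text):
    """Return added lines in a unified diff that appear to log request payloads."""
    findings = []
    for line in diff_text.splitlines():
        # Added lines start with a single '+'; '+++' marks the file header.
        if line.startswith("+") and not line.startswith("+++"):
            if RISKY_LOG.search(line):
                findings.append(line[1:].strip())
    return findings

# Hypothetical commit that adds payload logging to a signup handler.
sample_diff = """\
--- a/app/views.py
+++ b/app/views.py
@@ -10,3 +10,4 @@
 def create_user(request):
+    logger.info("new signup: %s", request.body)
     user = save(request)
"""

findings = flag_added_lines(sample_diff)
```

A check like this can run on every commit, so the question "what gets logged?" is answered when the code changes, not when a log store is next crawled.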

Why this isn't just a feature upgrade

Scanner vendors know about this gap. Many added "near real-time" features. They scan more often. They offer streaming integrations.

But bolting continuous features onto a static architecture doesn't work. A scanner that runs hourly instead of daily is still a scanner. It still misses what happens between runs. It still can't see code. It still can't track runtime. It still goes idle between scans.

A 24/7 Data Defense Engineer is architecturally different. Instrumentation, not crawling. Observation, not discovery. Always on, not periodically active.

You can't upgrade a scanner into an engineer. You have to rebuild from first principles.

Why it matters

Detection time.

Static scanning finds issues on the next run. Could be 23 hours later. Your Data Defense Engineer finds issues in seconds, any time of day.
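The "23 hours" figure is simple arithmetic. A minimal sketch, assuming scans run at exact multiples of the interval starting at hour 0 (the function name is hypothetical):

```python
def detection_delay_hours(event_hour, scan_interval_hours):
    """Hours from an event until the next scheduled scan.

    Assumes scans run at hours 0, interval, 2 * interval, and so on.
    """
    return (-event_hour) % scan_interval_hours

# An issue introduced one hour after a midnight scan, on a nightly schedule.
nightly_delay = detection_delay_hours(1, 24)   # 23

# The same issue on an hourly schedule lands exactly on the next scan.
hourly_delay = detection_delay_hours(1, 1)     # 0
```

Shrinking the interval shrinks the delay, but on a schedule the delay only reaches zero when an event happens to coincide with a scan.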

Incident response.

Static scanning tells you what existed at the last scan. Your Data Defense Engineer shows you where data actually went, with complete lineage.

Prevention.

Static scanning is reactive. Your Data Defense Engineer catches risky code before deployment, even commits pushed at 3 AM.

AI governance.

Static scanning can't govern AI. It doesn't see AI systems. Your Data Defense Engineer follows data through training, observes model behavior, and monitors inference around the clock.

Your data moves continuously. Your data doesn't sleep. Your Data Defense Engineer doesn't either.
