The AI Governance Imperative

Artificial intelligence governance has evolved from optional best practice to mandatory regulatory requirement. The EU AI Act, which entered into force in August 2024 with obligations phasing in through 2027, requires providers of high-risk AI systems to demonstrate continuous monitoring, risk management, and record-keeping across the system lifecycle. Similar regulations are emerging globally, creating urgent demand for governance technology that can meet these requirements.

Traditional Data Security Posture Management (DSPM) tools cannot adequately support AI governance frameworks because they were designed for static data environments rather than dynamic AI systems. Organizations implementing AI governance discover that existing DSPM solutions provide insufficient visibility, inadequate evidence collection, and limited AI-specific risk assessment capabilities. To see the technical foundation built specifically for dynamic AI systems, read our detailed Data Journeys Guide.

Key AI Governance Framework Requirements

Continuous AI System Monitoring

Regulatory Requirement: AI governance frameworks demand real-time monitoring of AI system behavior, data usage, and decision-making processes.

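EU AI Act Specification: High-risk AI systems must "technically allow for the automatic recording of events (logs) over the lifetime of the system" (Article 12), and providers must operate a post-market monitoring system that collects and analyzes data on system performance throughout the system's lifetime.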

Traditional DSPM Limitation: Batch-based scanning approaches cannot provide the continuous monitoring that AI governance frameworks require. Periodic assessments miss critical AI system behaviors that occur between scanning cycles.

Technical Gap: Traditional tools monitor data at rest but cannot track dynamic AI model inference, training data usage, or model-to-model communication that occurs continuously in production AI systems.
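To make the batch-versus-stream distinction concrete, here is a minimal sketch that evaluates every inference event as it arrives instead of waiting for a scan cycle. It assumes a hypothetical `InferenceEvent` carrying a model ID and a confidence score, and uses a sliding-window mean with an illustrative threshold; none of these names or values come from a specific product.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class InferenceEvent:
    # Hypothetical event shape: which model answered and how confident it was.
    model_id: str
    confidence: float


class SlidingWindowMonitor:
    """Checks each event on arrival against the most recent `window` events."""

    def __init__(self, window: int = 100, min_mean_confidence: float = 0.7):
        self.window = window
        self.min_mean_confidence = min_mean_confidence
        self.scores: deque[float] = deque(maxlen=window)  # oldest scores fall off automatically

    def observe(self, event: InferenceEvent) -> bool:
        """Record one event; return True if the window's mean confidence is below threshold."""
        self.scores.append(event.confidence)
        # Stay quiet until the window is full so a handful of early events cannot trigger alerts.
        if len(self.scores) < self.window:
            return False
        mean = sum(self.scores) / len(self.scores)
        return mean < self.min_mean_confidence
```

Because the check runs per event, a drop in confidence is flagged on the first event that pushes the window below threshold, rather than at the next scheduled scan.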

AI-Specific Risk Assessment

Regulatory Requirement: Organizations must assess and document AI-specific risks including bias, fairness, transparency, and algorithmic accountability.

Framework Standards: The NIST AI Risk Management Framework and ISO/IEC 23894 (guidance on AI risk management) call for systematic assessment of AI risks that differ fundamentally from traditional data security risks.

Traditional DSPM Limitation: Existing tools assess data security risks but lack AI-specific risk models for bias detection, model drift, fairness evaluation, and algorithmic transparency.

Capability Gap: Traditional DSPM tools cannot evaluate whether AI models make biased decisions, drift from intended behavior, or process data in ways that violate ethical AI principles.
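One common quantitative bias check is the disparate impact ratio: each group's favorable-outcome rate divided by the rate of the most favored group, often compared against the 0.8 "four-fifths" rule of thumb from US employment-selection guidance. The sketch below assumes decisions arrive as hypothetical `(group, favorable)` pairs; a real fairness assessment would combine several metrics rather than rely on this one alone.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group, from (group, favorable) pairs."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(
    decisions: list[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, tuple[float, bool]]:
    """Each group's rate relative to the highest rate, plus whether it falls below `threshold`."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so the ratios are undefined.
        raise ValueError("no favorable outcomes in any group")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}
```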

Algorithmic Transparency and Explainability

Regulatory Requirement: AI governance frameworks require organizations to explain AI system decision-making processes and demonstrate algorithmic transparency.

EU AI Act Article 13: High-risk AI systems must be "designed and developed in such a way as to ensure that their operation is sufficiently transparent to enable deployers to interpret a system's output and use it appropriately."

Traditional DSPM Limitation: Existing tools focus on data classification and access control but cannot provide visibility into AI model decision logic, feature importance, or algorithmic reasoning processes.

Transparency Gap: Organizations cannot demonstrate compliance with explainability requirements when their monitoring tools cannot track which data influences AI decisions or how models process information.

Data Lineage for AI Training and Inference

Regulatory Requirement: Organizations must maintain comprehensive records of data used for AI model training, validation, and inference.

Governance Framework Need: Complete audit trails from training data sources through model deployment and production inference.

Traditional DSPM Limitation: Existing lineage tools track data movement through databases and applications but cannot follow data through AI model training pipelines, hyperparameter tuning, and inference serving systems.

AI Pipeline Blindness: Traditional tools lose visibility when data moves through machine learning platforms, model training clusters, and AI inference infrastructure.
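Following data through an ML pipeline is, at its core, a graph problem: each dataset, preprocessing step, training run, model version, or serving endpoint is a node, and "was derived from" is an edge. The sketch below assumes those edges have already been captured (collecting them from a given MLOps platform is out of scope) and walks upstream from a deployed endpoint to everything it depends on. Node names are hypothetical.

```python
from collections import deque


def upstream_sources(edges: list[tuple[str, str]], target: str) -> set[str]:
    """Return every node that `target` was transitively derived from.

    `edges` holds (parent, child) pairs meaning "child was derived from parent".
    """
    parents: dict[str, list[str]] = {}
    for parent, child in edges:
        parents.setdefault(child, []).append(parent)

    seen: set[str] = set()
    queue = deque([target])
    while queue:  # breadth-first walk toward the original data sources
        node = queue.popleft()
        for parent in parents.get(node, []):
            if parent not in seen:  # shared ancestors are visited only once
                seen.add(parent)
                queue.append(parent)
    return seen
```

For example, with hypothetical edges `crm_db -> features_v3 -> train_run_42 -> model:churn@1.4 -> endpoint:churn-prod`, querying the endpoint returns the model version, the training run, the feature set, and the raw source.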

Specific Regulatory Compliance Gaps

EU AI Act Compliance Challenges

Article 9 Risk Management: Requires "the identification and analysis of the known and reasonably foreseeable risks" that a high-risk AI system can pose to health, safety, or fundamental rights.

- Traditional DSPM Gap: Cannot assess AI-specific risks like model bias, fairness violations, or algorithmic discrimination

- Compliance Impact: Organizations cannot demonstrate adequate AI risk assessment with traditional data security tools

Article 12 Record-Keeping: Requires high-risk AI systems to technically allow the automatic recording of events (logs) over the system's lifetime, capturing AI system operations and decisions.

- Traditional DSPM Gap: Batch processing approaches cannot provide automatic, continuous recording of AI system activities

- Evidence Collection: Traditional tools cannot generate the detailed audit logs that AI Act compliance requires
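Article 12 does not prescribe a log format. One common technique for making automatically recorded events tamper-evident is a hash chain, in which each entry includes the hash of the previous entry so that any later edit breaks verification. The following is a minimal standard-library sketch of that technique; the entry fields are illustrative assumptions, not fields mandated by the Act.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone


class HashChainedLog:
    """Append-only event log where each entry commits to the hash of the previous entry."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> dict:
        """Record one event (e.g. an inference request and its decision); return the entry."""
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": copy.deepcopy(event),  # snapshot so later caller edits cannot alter the log
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered, reordered, or dropped mid-chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("timestamp", "event", "prev_hash")}
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(body):
                return False
            prev = entry["hash"]
        return True
```

A hash chain on its own cannot detect truncation of the newest entries; deployments typically anchor the latest hash in a separate system for that reason.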

Article 13 Transparency: Requires high-risk AI systems to be accompanied by instructions for use containing "concise, complete, correct and clear information," including the system's capabilities and limitations of performance.

- Traditional DSPM Gap: Cannot provide visibility into AI model behavior, decision logic, or performance characteristics

- Transparency Failure: Organizations cannot meet explainability requirements without AI-specific monitoring capabilities

GDPR and AI Intersection

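Data Subject Rights: GDPR Article 22 gives individuals the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects, alongside rights such as access (Article 15) and erasure (Article 17).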

- Traditional DSPM Gap: Cannot track which specific data points influence automated decisions or enable selective data deletion from AI models

- Compliance Challenge: Organizations cannot fulfill data subject requests when they cannot trace individual data through AI processing pipelines

Purpose Limitation: GDPR Article 5(1)(b) requires personal data to be collected for specified, explicit, and legitimate purposes and not further processed in a manner incompatible with those purposes.

- Traditional DSPM Gap: Cannot monitor whether AI systems use data beyond originally specified purposes or detect purpose creep in AI applications

- Violation Risk: AI systems may violate purpose limitation without traditional monitoring tools detecting the compliance breach
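Detecting purpose creep presupposes that each dataset carries its declared purposes as metadata. Assuming that metadata exists (the `Dataset` type and purpose labels below are hypothetical), a check at the point where an AI workload requests data can flag uses that fall outside what was declared. Whether a new purpose is nonetheless compatible under GDPR Article 6(4) is a legal judgment; this sketch only surfaces candidates for review.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    # Purposes declared when the personal data was collected (hypothetical labels).
    declared_purposes: set[str] = field(default_factory=set)


def undeclared_uses(datasets: list[Dataset], requested_purpose: str) -> list[str]:
    """Names of datasets whose declared purposes do not cover the requested AI use."""
    return [d.name for d in datasets if requested_purpose not in d.declared_purposes]
```

For instance, a training job tagged `marketing_model_training` that pulls a support-ticket dataset declared only for `customer_support` would be flagged before training starts.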

Industry-Specific AI Governance

Financial Services: Regulatory requirements for explainable AI in credit decisions and risk assessment.

- Traditional DSPM Gap: Cannot provide evidence that AI lending decisions comply with fair lending requirements or demonstrate absence of discriminatory bias

- Regulatory Risk: Financial institutions face penalties for biased AI decisions that traditional monitoring cannot detect

Healthcare: FDA and other health regulators require AI system validation and continuous monitoring.

- Traditional DSPM Gap: Cannot track AI model performance degradation or detect when medical AI systems operate outside validated parameters

- Patient Safety: Traditional tools cannot ensure AI medical systems maintain safety and efficacy standards over time
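Detecting when a system operates outside validated parameters can start with comparing each production input against the ranges covered during validation. The sketch assumes a hypothetical envelope of per-feature minimum and maximum values recorded at validation time; real validated envelopes are usually richer (joint distributions, patient subpopulations, device settings).

```python
def out_of_envelope(
    features: dict[str, float], envelope: dict[str, tuple[float, float]]
) -> list[str]:
    """Return features that are missing or fall outside their validated [low, high] range."""
    violations = []
    for name, (low, high) in envelope.items():
        value = features.get(name)
        # A missing input is treated as a violation: the model was not validated without it.
        if value is None or not (low <= value <= high):
            violations.append(name)
    return violations
```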

Technical Capabilities Gap Analysis

AI Model Lifecycle Monitoring

Required Capability: Comprehensive tracking of AI models from development through deployment and retirement.

Traditional DSPM Limitation: Tools designed for application monitoring cannot track machine learning model lifecycles, version control, or deployment pipelines effectively.

Governance Impact: Organizations cannot demonstrate proper AI model governance without visibility into model development, testing, validation, and deployment processes.

Training Data Governance

Required Capability: Complete documentation and monitoring of data used for AI model training, including data sources, preprocessing, and quality validation.

Traditional DSPM Limitation: Existing tools classify training datasets but cannot track how data preprocessing affects model behavior or monitor training data quality over time.

Compliance Gap: Organizations cannot demonstrate training data compliance with governance frameworks when monitoring tools provide incomplete training pipeline visibility.
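A basic building block for training-data documentation is a manifest that pins exactly which data a training run consumed, so later audits can confirm the data has not silently changed. A minimal sketch, assuming training data is a directory of local files; the manifest layout (relative path mapped to SHA-256 digest) is an illustrative choice, not a standard schema.

```python
import hashlib
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under `root` (as a relative POSIX path) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): file_sha256(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(old: dict[str, str], new: dict[str, str]) -> set[str]:
    """Files added, removed, or modified between two manifests."""
    return {name for name in old.keys() | new.keys() if old.get(name) != new.get(name)}
```

Storing the manifest alongside the model version in a registry lets an auditor re-run `build_manifest` later and confirm that `changed_files` is empty.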

Inference Monitoring and Logging

Required Capability: Real-time monitoring of AI model inference requests, responses, and decision logic with complete audit trails.

Traditional DSPM Limitation: Tools monitor database queries and file access but cannot track AI inference patterns, model response characteristics, or decision reasoning.

Audit Failure: Traditional tools cannot generate the detailed inference logs required for AI governance compliance and algorithmic accountability.

Cross-Model Data Sharing

Required Capability: Tracking data flows between multiple AI models and systems, including model ensemble interactions and multi-model workflows.

Traditional DSPM Limitation: Existing tools cannot map data relationships between AI models or track how model outputs become inputs for other AI systems.

Governance Blindness: Organizations cannot assess cumulative AI risks when traditional tools provide no visibility into multi-model data processing workflows.
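Assessing cumulative risk across a multi-model workflow means letting a risk attribute follow data from one model's output into the next model's input. A minimal sketch, assuming the workflow is a directed acyclic graph of hypothetical model names and each model has a locally assessed risk score between 0 and 1: every model inherits the highest score of anything feeding it, computed in topological order with the standard library's `graphlib`. Taking the maximum is one simple, conservative aggregation rule chosen for illustration, not an established standard.

```python
from graphlib import TopologicalSorter


def cumulative_risk(
    feeds: dict[str, set[str]], local_risk: dict[str, float]
) -> dict[str, float]:
    """Propagate risk downstream: each model scores max(own risk, risk of all its inputs).

    `feeds` maps each model to the set of models whose outputs it consumes.
    Raises graphlib.CycleError if the workflow contains a cycle.
    """
    result: dict[str, float] = {}
    # static_order() yields every upstream model before any model that consumes it.
    for model in TopologicalSorter(feeds).static_order():
        upstream = [result[p] for p in feeds.get(model, set())]
        result[model] = max([local_risk.get(model, 0.0), *upstream])
    return result
```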

The AI Governance Technology Requirements

Real-Time AI System Monitoring

Technology Need: Stream processing capabilities that can monitor AI systems continuously rather than through periodic batch analysis.

Architecture Requirement: Integration with machine learning platforms, model serving infrastructure, and AI orchestration systems.

Operational Benefit: Immediate detection of AI system anomalies, policy violations, and performance degradation.

AI-Specific Risk Models

Technology Need: Risk assessment engines designed specifically for AI systems rather than traditional data security threats.

Model Requirements: Bias detection, fairness evaluation, model drift monitoring, and algorithmic accountability assessment.

Compliance Value: Automated evidence collection for AI-specific regulatory requirements and governance frameworks.
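Model drift monitoring often compares the distribution of a feature or score in production against a reference sample from training. One widely used statistic is the Population Stability Index (PSI), summed over bins as (actual share − expected share) × ln(actual share / expected share). The sketch below uses equal-width bins over the reference range and a small epsilon to avoid log(0); the bin count, the epsilon, and the frequently quoted rules of thumb (around 0.1 for moderate and 0.25 for significant shift) are conventions, not regulatory values.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to the reference sample `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp into [0, bins - 1] so out-of-range production values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```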

Model Explainability Integration

Technology Need: Integration with AI explainability tools and model interpretation systems.

Capability Requirement: Tracking feature importance, decision pathways, and model reasoning processes for audit and compliance.

Transparency Benefit: Automated generation of explanations for AI decisions to meet regulatory transparency requirements.
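Feature importance can be tracked in a model-agnostic way with permutation importance: shuffle one feature's values across an evaluation set and measure how much a quality metric drops. The sketch below uses plain Python, a toy scoring function standing in for a real model, and accuracy as the metric; all of these are assumptions for illustration. scikit-learn ships a production-grade version as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable

Row = dict[str, float]
Model = Callable[[Row], int]


def accuracy(model: Model, rows: list[Row], labels: list[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(
    model: Model, rows: list[Row], labels: list[int],
    feature: str, repeats: int = 20, seed: int = 0,
) -> float:
    """Mean accuracy drop when `feature` is shuffled across rows (higher = more influential)."""
    rng = random.Random(seed)  # fixed seed so audit reruns are reproducible
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Replace only the chosen feature; every other feature keeps its original value.
        permuted = [{**r, feature: v} for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, permuted, labels))
    return sum(drops) / repeats
```

A feature the model ignores scores exactly zero, while a feature that drives decisions shows a large drop, which is the kind of per-decision-factor evidence an explainability audit can record over time.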

AI Pipeline Data Lineage

Technology Need: Specialized lineage tracking for machine learning workflows including data preprocessing, model training, and inference pipelines.

Technical Requirement: Integration with MLOps platforms, model registries, and AI infrastructure rather than traditional data management systems.

Governance Advantage: Complete audit trails for AI data processing that meet regulatory documentation requirements.

Implementation Challenges

Integration Complexity

Challenge: AI governance technology must integrate with machine learning platforms, data science tools, and AI infrastructure that traditional DSPM tools were never designed to support.

Technical Barrier: Different APIs, data formats, and monitoring interfaces across the AI technology stack.

Solution Requirement: Native integration with modern AI platforms rather than retrofitted traditional monitoring approaches.

Skills and Training Gap

Challenge: Security teams need AI-specific knowledge to implement and operate AI governance technology effectively.

Knowledge Requirements: Understanding of machine learning concepts, AI bias and fairness, model validation, and AI-specific regulatory requirements.

Training Investment: Organizations must invest in security team education on AI governance concepts and practices.

Operational Maturity

Challenge: AI governance requires operational processes and procedures that many organizations have not yet developed.

Process Development: Incident response for AI bias, model validation workflows, AI risk assessment procedures, and governance review processes.

Organizational Change: Integration of AI governance into existing security operations and compliance programs.

The Path to AI-Native Governance

Organizations cannot meet AI governance framework requirements with traditional DSPM tools designed for conventional data security. The capability gaps are fundamental and will only grow as AI adoption accelerates and regulatory requirements become more stringent.

Effective AI governance requires comprehensive solutions that address specific jobs to be done:

AI Inventory and Lifecycle Management

AI inventory management provides full lifecycle visibility into first-party, third-party, and SaaS AI systems with automated asset classification and regulatory compliance mapping.

AI Security Posture Management

AI security posture management delivers dual-lens posture scoring for both security risks and governance gaps, with continuous hardening and audit-ready evidence collection.

AI Regulatory Compliance

AI regulatory mapping and compliance automation continuously aligns AI workflows with GDPR, EU AI Act, and NIST requirements while capturing audit-proof evidence in real time.

AI Data Governance

AI data lineage connects training data provenance to runtime inference decisions, providing complete accountability and explainability for AI system behavior.

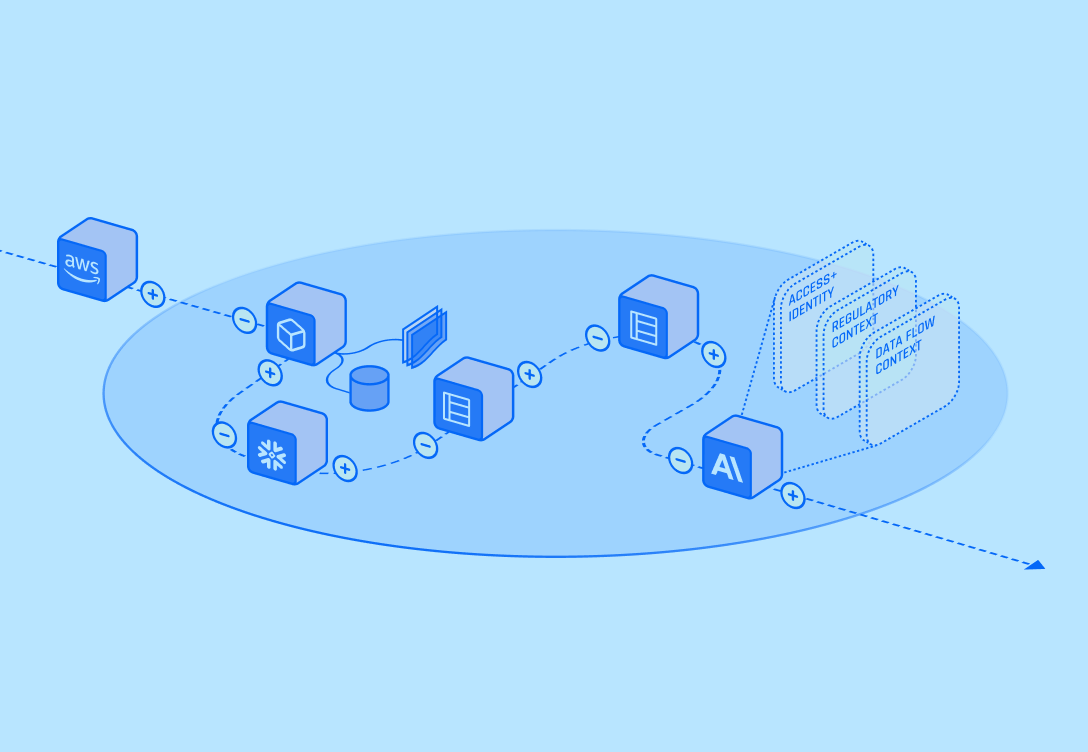

Data Journeys™ specifically designed for AI systems provide:

- Real-time monitoring of AI model behavior and data usage patterns

- AI-specific risk assessment and bias detection capabilities

- Complete data lineage through machine learning pipelines and inference systems

- Integration with AI explainability and model interpretation tools

- Automated evidence collection for AI regulatory compliance

The transition to AI-native governance is essential for organizations deploying AI systems at scale. Traditional DSPM approaches will leave critical governance gaps that expose organizations to regulatory violations, reputational damage, and operational risks.

Organizations implementing comprehensive AI governance solutions now will build competitive advantages through better AI risk management, faster regulatory compliance, and more confident AI innovation.